Synthetic human-like fakes: Difference between revisions

Juho Kunsola →Timeline of synthetic human-like fakes: applying (rough) reverse chronological order pass 1/2

Juho Kunsola →Timeline of synthetic human-like fakes: reverse chronological order, pass 2/2 (decades)

== Timeline of synthetic human-like fakes ==

=== 2020's synthetic human-like fakes ===

[[File:Marc Berman.jpg|thumb|120px|left|Homie [[w:Marc Berman|Marc Berman]], a righteous fighter for our human rights in this age of industrial disinformation filth and a member of the [[w:California State Assembly]], most loved for authoring [https://leginfo.legislature.ca.gov/faces/billTextClient.xhtml?bill_id=201920200AB602 AB-602], which came into effect on January 1, 2020, banning both the manufacturing and [[w:Digital distribution|distribution]] of synthetic pornography without the [[w:consent]] of the people depicted.]]

{{#ev:youtube|LWLadJFI8Pk|640px|right|''In Event of Moon Disaster - FULL FILM'' (2020) by the [https://moondisaster.org/ moondisaster.org] project by the [https://virtuality.mit.edu/ Center for Advanced Virtuality] of the [[w:Massachusetts Institute of Technology|MIT]]}}

* '''2020''' | demonstration | [https://www.youtube.com/watch?v=LWLadJFI8Pk ''In Event of Moon Disaster - FULL FILM'' at youtube.com] by the [https://moondisaster.org/ moondisaster.org] project by the [https://virtuality.mit.edu/ Center for Advanced Virtuality] of the [[w:Massachusetts Institute of Technology|MIT]] presents a synthetic human-like fake with the appearance, and nearly the voice, of [[w:Richard Nixon|Richard Nixon]].

** [https://www.cnet.com/news/mit-releases-deepfake-video-of-nixon-announcing-nasa-apollo-11-disaster/ Cnet.com July 2020 reporting ''MIT releases deepfake video of 'Nixon' announcing NASA Apollo 11 disaster'']

* '''2020''' | US state law | On January 1<ref name="KFI2019">
{{cite web
|url= https://kfiam640.iheart.com/content/2019-12-30-here-are-the-new-california-laws-going-into-effect-in-2020/
|title= Here Are the New California Laws Going Into Effect in 2020
|last= Johnson
|first= R.J.
|date= 2019-12-30
|website= [[KFI]]
|publisher= [[iHeartMedia]]
|access-date= 2020-07-13
|quote=}}
</ref> the [[w:California]] [[w:State law (United States)|state law]] [https://leginfo.legislature.ca.gov/faces/billTextClient.xhtml?bill_id=201920200AB602 AB-602] came into effect, banning the manufacturing and [[w:Digital distribution|distribution]] of synthetic pornography without the [[w:consent]] of the people depicted. AB-602 provides victims of synthetic pornography with [[w:injunction|injunctive relief]] and exposes [[w:criminal]]s who make or distribute synthetic pornography without consent to [[w:statutory damages|statutory]] and [[w:punitive damages]]. AB-602 was authored by [[w:California State Assembly]] member [[w:Marc Berman]] and signed into law by California [[w:Governor (United States)|Governor]] [[w:Gavin Newsom]] on October 3, 2019.<ref name="CNET2019">
{{cite web
| last = Mihalcik
| first = Carrie
| title = California laws seek to crack down on deepfakes in politics and porn
| website = [[w:cnet.com]]
| publisher = [[w:CNET]]
| date = 2019-10-04
| url = https://www.cnet.com/news/california-laws-seek-to-crack-down-on-deepfakes-in-politics-and-porn/
| access-date = 2020-07-13
}}
</ref>

* '''2020''' | Chinese legislation | On January 1, a Chinese law came into effect requiring that synthetically faked footage bear a clear notice about its fakeness. Failure to comply could be considered a [[w:crime]], the [[w:Cyberspace Administration of China]] stated on its website. China announced this new law in November 2019.<ref name="Reuters2019">
{{cite web
| url = https://www.reuters.com/article/us-china-technology/china-seeks-to-root-out-fake-news-and-deepfakes-with-new-online-content-rules-idUSKBN1Y30VU
| title = China seeks to root out fake news and deepfakes with new online content rules
| last =
| first =
| date = 2019-11-29
| website = [[w:Reuters.com]]
| publisher = [[w:Reuters]]
| access-date = 2020-07-13
| quote = }}
</ref> The Chinese government seems to be reserving the right to prosecute both users and [[w:online video platform]]s that fail to abide by the rules.<ref name="TheVerge2019">
{{cite web
| url = https://www.theverge.com/2019/11/29/20988363/china-deepfakes-ban-internet-rules-fake-news-disclosure-virtual-reality
| title = China makes it a criminal offense to publish deepfakes or fake news without disclosure
| last = Statt
| first = Nick
| date = 2019-11-29
| website =
| publisher = [[w:The Verge]]
| access-date = 2020-07-13
| quote = }}
</ref>

=== 2010's synthetic human-like fakes ===

* '''2019''' | demonstration | In September 2019 [[w:Yle]], the Finnish [[w:public broadcasting company]], aired as an experiment in [[w:journalism]] [https://yle.fi/uutiset/3-10955498 a deepfake] of the sitting President [[w:Sauli Niinistö]] in its main news broadcast, to highlight the advancing disinformation technology and the problems that arise from it.

* '''2019''' | US state law | On September 1 the [[w:Texas]] senate bill [https://capitol.texas.gov/tlodocs/86R/billtext/html/SB00751F.htm SB 751] [[w:amendment]]s to the election code came into effect, giving [[w:candidates]] in [[w:elections]] a 30-day protection period before the elections during which making and distributing digital look-alikes or synthetic fakes of the candidates is an offense. The law text defines the subject of the law as "''a video, created with the intent to deceive, that appears to depict a real person performing an action that did not occur in reality''"<ref name="TexasSB751">


</ref>, as [https://law.lis.virginia.gov/vacode/18.2-386.2/ § 18.2-386.2 titled 'Unlawful dissemination or sale of images of another; penalty.'] became part of the [[w:Code of Virginia]]. The law text states: "''Any person who, with the [[w:Intention (criminal law)|intent]] to [[w:coercion|coerce]], [[w:harassment|harass]], or [[w:intimidation|intimidate]], [[w:Malice_(law)|malicious]]ly [[w:dissemination|disseminates]] or [[w:sales|sells]] any videographic or still image created by any means whatsoever that depicts another person who is totally [[w:nudity|nude]], or in a state of undress so as to expose the [[w:sex organs|genitals]], pubic area, [[w:buttocks]], or female [[w:breast]], where such person knows or has reason to know that he is not [[w:license]]d or [[w:authorization|authorized]] to disseminate or sell such videographic or still image is guilty of a Class 1 [[w:Misdemeanor#United States|misdemeanor]].''"<ref name="Virginia2019Chapter515"/> The law originated as two identical bills: [https://lis.virginia.gov/cgi-bin/legp604.exe?191+sum+HB2678 House Bill 2678], presented by [[w:Delegate (American politics)|Delegate]] [[w:Marcus Simon]] to the [[w:Virginia House of Delegates]] on January 14, 2019, and [https://lis.virginia.gov/cgi-bin/legp604.exe?191+sum+SB1736 Senate Bill 1736], introduced to the [[w:Senate of Virginia]] by Senator [[w:Adam Ebbin]] three days later.

* '''2019''' | science and demonstration | [https://arxiv.org/pdf/1905.09773.pdf ''''Speech2Face: Learning the Face Behind a Voice'''' at arXiv.org], a system for generating likely facial features based on the voice of a person, presented by the [[w:MIT Computer Science and Artificial Intelligence Laboratory]] at the 2019 [[w:Conference on Computer Vision and Pattern Recognition|CVPR]]. [https://github.com/saiteja-talluri/Speech2Face Speech2Face at github.com] This may develop into something that causes real problems. [https://neurohive.io/en/news/speech2face-neural-network-predicts-the-face-behind-a-voice/ "Speech2Face: Neural Network Predicts the Face Behind a Voice" reporting at neurohive.io], [https://belitsoft.com/speech-recognition-software-development/speech2face "Speech2Face Sees Voices and Hears Faces: Dreams Come True with AI" reporting at belitsoft.com]


|website= [[Medium.com]]
|access-date= 2020-07-13
}}
</ref>

* '''<font color="red">2018</font>''' | '''<font color="red">counter-measure</font>''' | In September 2018 Google added “'''involuntary synthetic pornographic imagery'''” to its '''ban list''', allowing anyone to request that the search engine block results that falsely depict them as “nude or in a sexually explicit situation.”<ref name="WashingtonPost2018">


| quote = }}

</ref> Neither the [[w:speech synthesis]] used nor the gesturing of the digital look-alike anchors was good enough to deceive viewers into mistaking them for real humans filmed with a TV camera.

* '''2018''' | controversy / demonstration | The [[w:deepfake]]s controversy surfaces: [[w:Pornographic film|porn video]]s were doctored using [[w:deep learning|deep machine learning]] so that the actress's face was replaced by the software's estimate of what another person's face would look like in the same pose and lighting.


* '''<font color="red">2016</font>''' | <font color="red">science</font> and demonstration | '''[[w:Adobe Inc.]]''' publicly demonstrates '''[[w:Adobe Voco]]''', a '''sound-like-anyone machine''' [https://www.youtube.com/watch?v=I3l4XLZ59iw '#VoCo. Adobe Audio Manipulator Sneak Peak with Jordan Peele | Adobe Creative Cloud' on Youtube]. The original Adobe Voco required '''20 minutes''' of sample audio '''to thieve a voice'''. <font color="green">'''Relevancy: certain'''</font>.

* '''2016''' | science | '''[http://www.niessnerlab.org/projects/thies2016face.html 'Face2Face: Real-time Face Capture and Reenactment of RGB Videos' at Niessnerlab.org]''' A paper (with videos) on semi-real-time 2D video manipulation with gesture forcing and lip sync forcing synthesis by Thies et al., Stanford. <font color="green">'''Relevancy: certain'''</font>

* '''2015''' | movie | For ''[[w:Furious 7]]'', [[w:Weta Digital]] made a digital look-alike of the actor [[w:Paul Walker]], who died in an accident during the filming, to enable the completion of the film.<ref name="thr2015">


| quote = }}
</ref>

* '''2014''' | science | [[w:Ian Goodfellow]] et al. presented the principles of a [[w:generative adversarial network]]. GANs made the headlines in early 2018 with the [[w:deepfake]]s controversies.


* '''2013''' | demonstration | '''[https://ict.usc.edu/pubs/Scanning%20and%20Printing%20a%203D%20Portrait%20of%20President%20Barack%20Obama.pdf 'Scanning and Printing a 3D Portrait of President Barack Obama' at ict.usc.edu]'''. A 7D model and a 3D bust were made of President Obama with his consent. <font color="green">'''Relevancy: certain'''</font>

=== 2000's synthetic human-like fakes ===

[[File:The-matrix-logo.svg|thumb|right|300px|Logo of the [[w:The Matrix (franchise)]]]]

* '''2010''' | movie | [[w:Walt Disney Pictures]] released a sci-fi sequel entitled ''[[w:Tron: Legacy]]'' with a digitally rejuvenated digital look-alike made of the actor [[w:Jeff Bridges]] playing the [[w:antagonist]] [[w:List of Tron characters#CLU|CLU]].

* '''2009''' | movie | A digital look-alike of a younger [[w:Arnold Schwarzenegger]] was made for the movie ''[[w:Terminator Salvation]]'', though the end result was critiqued as unconvincing. Facial geometry was acquired from a 1984 mold of Schwarzenegger.

* '''2009''' | demonstration | [http://www.ted.com/talks/paul_debevec_animates_a_photo_real_digital_face.html Paul Debevec: ''''Animating a photo-realistic face'''' at ted.com] Debevec et al. presented new digital likenesses, made by [[w:Image Metrics]], this time of actress [[w:Emily O'Brien]], whose reflectance was captured with the USC light stage 5. At 00:04:59 you can see two clips, one with the real Emily shot with a real camera and one with a digital look-alike of Emily, shot with a simulation of a camera - <u>Which is which is difficult to tell</u>. Bruce Lawmen was scanned using USC light stage 6 in a still position and also recorded running there on a [[w:treadmill]]. Many, many digital look-alikes of Bruce are seen running fluently and naturally in the ending sequence of the TED talk video. <ref name="Deb2009">[http://www.ted.com/talks/paul_debevec_animates_a_photo_real_digital_face.html In this TED talk video] at 00:04:59 you can see ''two clips, one with the real Emily shot with a real camera and one with a digital look-alike of Emily, shot with a simulation of a camera - <u>Which is which is difficult to tell</u>''. Bruce Lawmen was scanned using USC light stage 6 in still position and also recorded running there on a [[w:treadmill]]. Many, many digital look-alikes of Bruce are seen running fluently and natural looking at the ending sequence of the TED talk video.</ref> Motion looks fairly convincing compared with the clunky run in the [[w:Animatrix#Final Flight of the Osiris|''Animatrix: Final Flight of the Osiris'']], which was [[w:state-of-the-art]] in 2003 if photorealism was the intention of the [[w:animators]].

* '''2004''' | movie | The '''[[w:Spider-man 2]]''' (and '''[[w:Spider-man 3]]''', 2007) films. Relevancy: The films include a [[Synthetic human-like fakes#Digital look-alike|digital look-alike]] made of actor [[w:Tobey Maguire]] by [[w:Sony Pictures Imageworks]].<ref name="Pig2005">{{cite web
| last = Pighin
| first = Frédéric
| author-link =
| title = Siggraph 2005 Digital Face Cloning Course Notes
| website =
| date =
| url = http://pages.cs.wisc.edu/~lizhang/sig-course-05-face/COURSE_9_DigitalFaceCloning.pdf
| format =
| doi =
| accessdate = 2020-06-26}}
</ref>

* '''2003''' | short film | [[w:The Animatrix#Final Flight of the Osiris|''The Animatrix: Final Flight of the Osiris'']], a [[w:state-of-the-art]] attempt at human likenesses made by [[w:Square Pictures#Square Pictures|Square Pictures]] that did not quite fool the viewer.

* '''2003''' | movie(s) | The '''[[w:Matrix Reloaded]]''' and '''[[w:Matrix Revolutions]]''' films. Relevancy: '''First public display''' of '''[[synthetic human-like fakes#Digital look-alikes|digital look-alikes]]''' that are virtually '''indistinguishable from''' the '''real actors'''.

* '''2002''' | music video | '''[https://www.youtube.com/watch?v=3qIXIHAmcKU 'Bullet' by Covenant on Youtube]''' by [[w:Covenant (band)]] from their album [[w:Northern Light (Covenant album)]]. Relevancy: Contains the best upper-torso digital look-alike of Eskil Simonsson (vocalist) that their organization could procure at the time. Here you can observe the '''classic "''skin looks like cardboard''"-bug''' (assuming this was not intended) that '''thwarted efforts to''' make digital look-alikes that '''pass human testing''' before the '''reflectance capture and dissection in 1999''' by [[w:Paul Debevec]] et al. at the [[w:University of Southern California]] and the subsequent development of the '''"Analytical [[w:bidirectional reflectance distribution function|BRDF]]"''' (quote-unquote) by ESC Entertainment, a company set up for the '''sole purpose''' of '''making the cinematography''' for the 2003 films Matrix Reloaded and Matrix Revolutions '''possible''', led by George Borshukov.

=== 1990's synthetic human-like fakes ===

[[File:BSSDF01_400.svg|thumb|left|300px|Traditional [[w:Bidirectional reflectance distribution function|BRDF]] vs. [[w:subsurface scattering|subsurface scattering]] inclusive BSSRDF i.e. [[w:Bidirectional scattering distribution function#Overview of the BxDF functions|Bidirectional scattering-surface reflectance distribution function]]. <br/><br/>
An analytical BRDF must take into account the subsurface scattering, or the end result '''will not pass human testing'''.]]

* <font color="red">'''1999'''</font> | <font color="red">'''science'''</font> | '''[http://dl.acm.org/citation.cfm?id=344855 'Acquiring the reflectance field of a human face' paper at dl.acm.org ]''' [[w:Paul Debevec]] et al. of [[w:University of Southern California|USC]] did the '''first known reflectance capture''' over '''the human face''' with their extremely simple [[w:light stage]]. They presented their method and results at [[w:SIGGRAPH]] 2000. The scientific breakthrough required finding the [[w:subsurface scattering|subsurface light component]] (the simulation models are glowing from within slightly), which can be separated using the knowledge that light reflected from the oil-to-air layer retains its [[w:Polarization (waves)|polarization]] while the subsurface light loses its polarization. Equipped with only a movable light source, a movable video camera, two polarizers and a computer program doing extremely simple math, they acquired the last piece required to reach photorealism.<ref name="Deb2000"/>

* <font color="red">'''1999'''</font> | <font color="red">'''institute founded'''</font> | The '''[[w:Institute for Creative Technologies]]''' was founded by the [[w:United States Army]] at the [[w:University of Southern California]]. It collaborates with the [[w:United States Army Futures Command]], [[w:United States Army Combat Capabilities Development Command]], [[w:Combat Capabilities Development Command Soldier Center]] and [[w:United States Army Research Laboratory]].<ref name="ICT-about">https://ict.usc.edu/about/</ref>

* '''1994''' | movie | [[w:The Crow (1994 film)|The Crow]] was the first film production to make use of [[w:digital compositing]] of a computer-simulated representation of a face onto scenes filmed using a [[w:body double]]. Necessity was the muse: the actor [[w:Brandon Lee]], portraying the protagonist, was tragically and accidentally killed on set.

=== 1970's synthetic human-like fakes ===

* '''1976''' | movie | ''[[w:Futureworld]]'' reused parts of ''A Computer Animated Hand'' on the big screen.

* '''1972''' | entertainment | '''[https://vimeo.com/59434349 'A Computer Animated Hand' on Vimeo]'''. [[w:A Computer Animated Hand]] by [[w:Edwin Catmull]] and [[w:Fred Parke]]. Relevancy: This was the '''first time''' that [[w:computer-generated imagery|computer-generated imagery]] was used in film to '''animate''' moving '''human-like appearance'''.


* '''1971''' | science | '''[https://interstices.info/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud/ 'Images de synthèse : palme de la longévité pour l’ombrage de Gouraud' (still photos)]'''. [[w:Henri Gouraud (computer scientist)]] made the first [[w:Computer graphics]] [[w:geometry]] [[w:digitization]] and representation of a human face. The model was his wife, Sylvie Gouraud. The 3D model was a simple [[w:wire-frame model]], and he applied [[w:Gouraud shading]] to produce the '''first known representation''' of '''human-likeness''' on computer.<ref>{{cite web|title=Images de synthèse : palme de la longévité pour l'ombrage de Gouraud|url=http://interstices.info/jcms/c_25256/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud}}</ref>

=== 1770's synthetic human-like fakes ===

[[File:Kempelen Speakingmachine.JPG|right|thumb|300px|A replica of [[w:Wolfgang von Kempelen|Kempelen]]'s [[w:Wolfgang von Kempelen's Speaking Machine|speaking machine]], built 2007–09 at the Department of [[w:Phonetics|Phonetics]], [[w:Saarland University|Saarland University]], [[w:Saarbrücken|Saarbrücken]], Germany. This machine added models of the tongue and lips, enabling it to produce [[w:consonant|consonant]]s as well as [[w:vowel|vowel]]s]]

* '''1791''' | science | '''[[w:Wolfgang von Kempelen's Speaking Machine]]''' of [[w:Wolfgang von Kempelen]] of [[w:Pressburg]], [[w:Hungary]], described in a 1791 paper, was [[w:bellows]]-operated.<ref>''Mechanismus der menschlichen Sprache nebst der Beschreibung seiner sprechenden Maschine'' ("Mechanism of the human speech with description of its speaking machine", J. B. Degen, Vienna).</ref> This machine added models of the tongue and lips, enabling it to produce [[w:consonant]]s as well as [[w:vowel]]s. (Based on [[w:Speech synthesis#History]])

* '''1779''' | science / discovery | [[w:Christian Gottlieb Kratzenstein]] won the first prize in a competition announced by the [[w:Russian Academy of Sciences]] for '''models''' he built of the '''human [[w:vocal tract]]''' that could produce the five long '''[[w:vowel]]''' sounds.<ref name="Helsinki">
[http://www.acoustics.hut.fi/publications/files/theses/lemmetty_mst/chap2.html History and Development of Speech Synthesis], Helsinki University of Technology, Retrieved on November 4, 2006
</ref> (Based on [[w:Speech synthesis#History]])

----

Revision as of 18:04, 24 July 2020

When the camera does not exist, but the subject imaged with a simulation of a (movie) camera deceives the viewer into believing it is some living or dead person, it is a digital look-alike.

When it cannot be determined by human testing whether some fake voice is a synthetic fake of some person's voice or an actual recording of that person's real voice, it is a digital sound-alike.

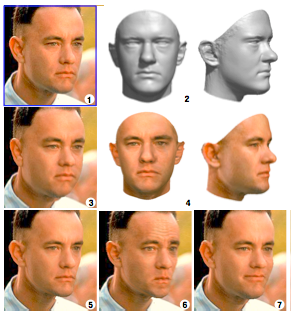

(1) Sculpting a morphable model to one single picture

(2) Produces 3D approximation

(3) The 3D model is rendered back to the image with weight gain

(4) Texture capture

(5) With weight loss

(6) Looking annoyed

(7) Forced to smile

Image 2 by Blanz and Vetter – Copyright ACM 1999 – http://dl.acm.org/citation.cfm?doid=311535.311556 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.
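The figure shows a morphable face model in the sense of Blanz and Vetter's 1999 work: as commonly described, a face shape is an average shape plus a weighted sum of basis shapes, and edits such as weight gain or weight loss move the fitted shape along an attribute direction. The sketch below illustrates only that linear combination step, assuming toy flattened vertex vectors. The function names, the tiny basis and the attribute direction are hypothetical, and the actual fitting of the coefficients to a single photograph (an optimization over shape, texture, pose and lighting) is not shown.

```python
from typing import List, Sequence


def morph_shape(mean_shape: Sequence[float],
                basis: Sequence[Sequence[float]],
                coefficients: Sequence[float]) -> List[float]:
    """Return mean_shape + sum_i coefficients[i] * basis[i].

    Shapes are flattened vertex coordinates (x1, y1, z1, x2, y2, z2, ...).
    """
    if len(basis) != len(coefficients):
        raise ValueError("need one coefficient per basis shape")
    shape = list(mean_shape)
    for alpha, basis_shape in zip(coefficients, basis):
        if len(basis_shape) != len(shape):
            raise ValueError("basis shape length must match mean shape")
        for k, value in enumerate(basis_shape):
            shape[k] += alpha * value
    return shape


def apply_attribute(shape: Sequence[float],
                    direction: Sequence[float],
                    amount: float) -> List[float]:
    """Move a shape along a (hypothetical) attribute direction, e.g. a
    'weight gain' vector; a negative amount reverses the edit."""
    if len(direction) != len(shape):
        raise ValueError("direction length must match shape")
    return [s + amount * d for s, d in zip(shape, direction)]


# Toy example: two vertices, two basis shapes (all values hypothetical).
mean = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
basis = [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0, 0.0, 0.0, 0.0]]
weight_gain = [0.0, 0.0, 1.0, 0.0, 0.0, 1.0]

fitted = morph_shape(mean, basis, [2.0, -1.0])
heavier = apply_attribute(fitted, weight_gain, 0.5)
print(fitted)   # [2.0, -1.0, 0.0, 1.0, 1.0, 1.0]
print(heavier)  # [2.0, -1.0, 0.5, 1.0, 1.0, 1.5]
```

Because the model is linear, the same coefficient vector can be pushed in either direction along an attribute, which is why panels (3) and (5) can come from one fitted face.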

'Saint John on Patmos' pictures John of Patmos on Patmos writing down the visions to make the Book of Revelation. Picture from folio 17 of the Très Riches Heures du Duc de Berry (1412-1416) by the Limbourg brothers. Currently located at the Musée Condé, 40 km north of Paris, France.

Digital look-alikes

Introduction to digital look-alikes

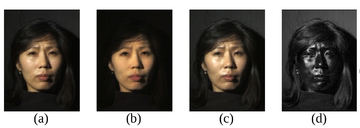

(a) Normal image lit by a point light source

(b) Image of the diffuse reflection, which is caught by placing a vertical polarizer in front of the light source and a horizontal one in front of the camera

(c) Image of the diffuse reflection together with the highlight specular reflection, which is caught by placing both polarizers vertically

(d) Subtraction of b from c, which yields the specular component

Images are scaled to appear equally luminous.

Original image by Debevec et al. – Copyright ACM 2000 – https://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

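The polarization trick in panels (b) to (d) can be sketched in a few lines. This is a minimal illustration, not Debevec et al.'s actual pipeline. It assumes two registered grayscale images in linear intensity taken under the same light: one with crossed polarizers, which block the specular highlight, and one with parallel polarizers (both vertical, as in panel c), which pass the specular highlight together with the diffuse light. It also assumes the depolarized diffuse light contributes equally to both images. The function name and the toy pixel values are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of linear intensities


def separate_reflectance(cross_polarized: Image,
                         parallel_polarized: Image) -> Tuple[Image, Image]:
    """Split two polarized photographs into diffuse and specular parts.

    cross_polarized:    polarizers crossed; only diffuse light remains.
    parallel_polarized: polarizers aligned; diffuse plus specular light.
    Returns (diffuse, specular) with specular = parallel - cross,
    clamped at zero so sensor noise cannot produce negative light.
    """
    if len(cross_polarized) != len(parallel_polarized):
        raise ValueError("images must have the same height")
    diffuse: Image = []
    specular: Image = []
    for cross_row, par_row in zip(cross_polarized, parallel_polarized):
        if len(cross_row) != len(par_row):
            raise ValueError("images must have the same width")
        diffuse.append(list(cross_row))  # copy, do not alias the input
        specular.append([max(p - c, 0.0) for c, p in zip(cross_row, par_row)])
    return diffuse, specular


# Toy 2x2 example (hypothetical values).
cross = [[0.25, 0.5], [0.125, 0.375]]
parallel = [[0.75, 0.5], [0.0, 0.875]]
diffuse, specular = separate_reflectance(cross, parallel)
print(specular)  # [[0.5, 0.0], [0.0, 0.5]]
```

The returned diffuse image is the cross-polarized image only up to a constant scale factor, which this sketch ignores; clamping at zero is a design choice for noisy real captures.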

In the cinemas we have seen digital look-alikes for over 15 years. These digital look-alikes have "clothing" (a simulation of clothing is not clothing) or "superhero costumes" and "superbaddie costumes", and they don't need to care about the laws of physics, let alone the laws of physiology. It is generally accepted that digital look-alikes made their public debut in the sequels of The Matrix, i.e. The Matrix Reloaded and The Matrix Revolutions, released in 2003. It can be considered almost certain that it was not possible to make these before 1999, as the final piece of the puzzle to make a (still) digital look-alike that passes human testing, the reflectance capture over the human face, was made for the first time in 1999 at the University of Southern California and was presented to the crème de la crème of the computer graphics field at their annual gathering, SIGGRAPH 2000.[1]

“Do you think that was Hugo Weaving's left cheekbone that Keanu Reeves punched in with his right fist?”

The problems with digital look-alikes

Most unfortunately for humankind, organized criminal leagues that possess the weapons capability of making believable-looking synthetic pornography are producing synthetic terror porn[footnote 1] on industrial production pipelines by animating digital look-alikes, and are distributing it in the murky corners of the Internet in exchange for money stacks that are getting thinner and thinner as time goes by.

These industrially produced pornographic delusions are causing great human suffering, especially to their direct victims, but they are also tearing our communities and societies apart, sowing blind rage and perceptions of deepening chaos, creating feelings of powerlessness and provoking violence. This hate illustration increases and strengthens hate thinking, hate speech and hate crimes, tears our fragile social constructions apart and, with time, perverts humankind's view of humankind into an almost unrecognizable shape, unless we interfere with resolve.

For these reasons, the bannable raw materials, i.e. covert models, needed to produce this disinformation terror on information-industrial production pipelines should be prohibited by law in order to protect humans from arbitrary abuse by criminal parties.

List of possible naked digital look-alike attacks

- The classic "portrayal of as if in involuntary sex"-attack. (Digital look-alike "cries")

- "Sexual preference alteration"-attack. (Digital look-alike "smiles")

- "Cutting / beating"-attack (Constructs a deceptive history for genuine scars)

- "Mutilation"-attack (Digital look-alike "dies")

- "Unconscious and injected"-attack (Digital look-alike gets "disease")

Digital sound-alikes

Living people can defend[footnote 2] themselves against a digital sound-alike by denying the things it says if the recordings are presented to them, but dead people cannot. Digital sound-alikes offer criminals new disinformation attack vectors and wreak havoc on provability.

'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' 2018 by Google Research (external transclusion)

- At the 2018 Conference on Neural Information Processing Systems (NeurIPS) the work 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' (at arXiv.org) was presented. The pre-trained model is able to steal voices from a sample of only 5 seconds with almost convincing results.

The iframe below is transcluded from 'Audio samples from "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis"' at google.github.io, the audio samples of a sound-like-anyone machine presented at the 2018 w:NeurIPS conference by Google researchers.

Observe how good the "VCTK p240" system is at deceiving the listener into thinking that a person is doing the talking.
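The "speaker verification" half of this approach can be illustrated with a minimal sketch. Assumption: a speaker encoder has already turned overlapping windows of a short reference clip into fixed-length vectors; here those vectors are made-up numbers, and the helpers `speaker_embedding` and `cosine_similarity` are hypothetical names, not the Google researchers' code. Roughly in the style of such encoders, the sketch averages unit-length window embeddings into one utterance-level embedding and compares embeddings by cosine similarity. A synthesizer conditioned on an embedding like this is what lets a few seconds of audio stand in for a whole voice.

```python
import math
from typing import List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def speaker_embedding(window_embeddings: Sequence[Sequence[float]]) -> Vector:
    """Hypothetical helper: average unit-length window embeddings, then renormalize."""
    if not window_embeddings:
        raise ValueError("need at least one window embedding")
    normed = [l2_normalize(w) for w in window_embeddings]
    dim = len(normed[0])
    mean = [sum(w[i] for w in normed) / len(normed) for i in range(dim)]
    return l2_normalize(mean)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


if __name__ == "__main__":
    # Made-up window embeddings standing in for short reference clips.
    clip_a = [[0.9, 0.1, 0.0], [1.0, 0.2, 0.1], [0.8, 0.0, 0.1]]
    clip_b = [[1.0, 0.1, 0.05], [0.9, 0.15, 0.0]]  # same (hypothetical) speaker
    clip_c = [[0.0, 1.0, 0.2], [0.1, 0.9, 0.3]]    # a different speaker
    emb_a, emb_b, emb_c = (speaker_embedding(clip) for clip in (clip_a, clip_b, clip_c))
    print(round(cosine_similarity(emb_a, emb_b), 3))  # high: voices match
    print(round(cosine_similarity(emb_a, emb_c), 3))  # low: voices differ
```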

Example of a hypothetical 4-victim digital sound-alike attack

A very simple example of a digital sound-alike attack is as follows:

Someone uses a digital sound-alike to call somebody's voicemail from an unknown number and to speak, for example, illegal threats. In this example there are at least two victims:

- Victim #1 - The person whose voice has been stolen into a covert model and a digital sound-alike made from it to frame them for crimes

- Victim #2 - The person to whom the illegal threat is presented in a recorded form by a digital sound-alike that deceptively sounds like victim #1

- Victim #3 - It could also be argued that victim #3 is our law enforcement systems, as they are made to chase after and interrogate the innocent victim #1

- Victim #4 - Our judiciary which prosecutes and possibly convicts the innocent victim #1.

Thus it is high time to act and to criminalize the covert modeling of human appearance and voice!

Examples of speech synthesis software not quite able to fool a human yet

Some other contenders for creating digital sound-alikes are listed below, though, as of 2019, their speech synthesis in most use scenarios does not yet fool a human because the results contain telltale signs that give it away as a speech synthesizer.

- Lyrebird.ai (listen)

- CandyVoice.com (test with your choice of text)

- Merlin, a w:neural network based speech synthesis system by the Centre for Speech Technology Research at the w:University of Edinburgh

- 'Neural Voice Cloning with a Few Samples' at papers.nips.cc, w:Baidu Research's shot at a sound-like-anyone machine, did not convince in 2018

Reporting on the sound-like-anyone-machines

- "Artificial Intelligence Can Now Copy Your Voice: What Does That Mean For Humans?" May 2019 reporting at forbes.com on w:Baidu Research's attempt at the sound-like-anyone machine demonstrated at the 2018 w:NeurIPS conference.

Documented digital sound-alike attacks

- Sound-like-anyone technology has found its way into the hands of criminals: in 2019 Symantec researchers knew of 3 cases where the technology had been used for crime

- "Fake voices 'help cyber-crooks steal cash'" at bbc.com July 2019 reporting [2]

- "An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft" at washingtonpost.com documents a w:fraud committed with a digital sound-like-anyone machine, July 2019 reporting.[3]

The video below, 'This AI Clones Your Voice After Listening for 5 Seconds' by '2 minute papers', describes the voice-thieving machine presented by Google Research at NeurIPS 2018.

Text synthesis

w:Chatbots have existed for a long time, but only now, armed with AI, are they becoming more deceptive.

In w:natural language processing, developments in w:natural-language understanding lead to more cunning w:natural-language generation AI.

w:OpenAI's Generative Pre-trained Transformer (GPT) is a left-to-right transformer-based text generation model, succeeded by GPT-2 and GPT-3.
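"Left-to-right" means the model predicts each next token from the tokens to its left, appends it, and repeats until it emits an end marker. The loop below is a minimal sketch of that decoding procedure under a loud assumption: instead of a transformer, a made-up bigram count table (`BIGRAMS`, hypothetical) supplies the next-token scores, and greedy decoding picks the highest-scoring token. A real GPT model conditions on the whole prefix, not only on the last token.

```python
from typing import Dict, List

# Hypothetical toy "model": how often each word followed another.
# A transformer would instead score next tokens using the entire prefix.
BIGRAMS: Dict[str, Dict[str, int]] = {
    "<s>": {"the": 3, "a": 1},
    "the": {"voice": 2, "face": 1},
    "voice": {"sounds": 2, "</s>": 1},
    "sounds": {"real": 3},
    "real": {"</s>": 4},
    "face": {"</s>": 1},
    "a": {"face": 1},
}


def next_token(prefix: List[str]) -> str:
    """Greedy choice: the most frequent successor of the last token so far."""
    candidates = BIGRAMS.get(prefix[-1], {"</s>": 1})
    return max(candidates, key=candidates.get)


def generate(max_tokens: int = 10) -> List[str]:
    """Left-to-right decoding: predict, append, repeat until end-of-sequence."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        tok = next_token(tokens)
        if tok == "</s>":
            break
        tokens.append(tok)
    return tokens[1:]  # drop the start marker


print(" ".join(generate()))  # the voice sounds real
```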

Reporting / announcements

- 'OpenAI’s latest AI text generator GPT-3 amazes early adopters' at siliconangle.com July 2020 reporting on GPT-3

- OpenAI releases the full version of GPT-2 at openai.com in November 2019

- 'OpenAI releases curtailed version of GPT-2 language model' at venturebeat.com, August 2019 reporting on the original release of the curtailed version of GPT-2

External links

- "Detection of Fake and False News (Text Analysis): Approaches and CNN as Deep Learning Model" at analyticsteps.com, a 2019 summary written by Shubham Panth.

Countermeasures against synthetic human-like fakes

Organizations against synthetic human-like fakes

- w:DARPA program: 'Media Forensics (MediFor)' at darpa.mil aims to develop technologies for the automated assessment of the integrity of an image or video and to integrate these into an end-to-end media forensics platform. Archive.org first crawled their homepage in June 2016[4].

- DARPA program: 'Semantic Forensics (SemaFor)' at darpa.mil aims to counter synthetic disinformation by developing systems for detecting semantic inconsistencies in forged media. They state that they hope to create technologies that "will help identify, deter, and understand adversary disinformation campaigns". More information at w:Duke University's Research Funding database: Semantic Forensics (SemaFor) at researchfunding.duke.edu and some at Semantic Forensics grant opportunity (closed Nov 2019) at grants.gov. Archive.org first crawled their website in November 2019[5]

- w:University of Colorado Denver is the home of the National Center for Media Forensics at artsandmedia.ucdenver.edu, which offers a Master's degree program, training courses and basic and applied scientific research. See also: Faculty staff at the NCMF

- w:SAG-AFTRA's 'ACTION ALERT: "Support California Bill to End Deepfake Porn"' at sagaftra.org endorses California Senate Bill SB 564, introduced to the California State Senate by California Senator Connie Leyva in Feb 2019.

Events against synthetic human-like fakes

- 2020 | CVPR | 2020 Conference on Computer Vision and Pattern Recognition: 'Workshop on Media Forensics' at sites.google.com, a June 2020 workshop at the w:Conference on Computer Vision and Pattern Recognition.

- 2019 | NeurIPS | Facebook, Inc. "Facebook AI Launches Its Deepfake Detection Challenge" at spectrum.ieee.org

- 2019 | CVPR | 2019 CVPR: 'Workshop on Media Forensics'

- Annual (?) | w:National Institute of Standards and Technology (NIST) | NIST: 'Media Forensics Challenge' at nist.gov, an iterative research challenge by the w:National Institute of Standards and Technology, with the ongoing challenge being the 2nd iteration. The evaluation criteria for the 2019 iteration are being formed.

- 2018 | ECCV | ECCV 2018: 'Workshop on Objectionable Content and Misinformation' at sites.google.com, a workshop at the 2018 w:European Conference on Computer Vision

Studies against synthetic human-like fakes

- 'Media Forensics and DeepFakes: an overview' at arXiv.org (as .pdf at arXiv.org), a 2020 review on the subject of digital look-alikes and media forensics

- 'DEEPFAKES: False pornography is here and the law cannot protect you' at scholarship.law.duke.edu by Douglas Harris, published in Duke Law & Technology Review - Volume 17 on 2019-01-05 by Duke University School of Law


Companies against synthetic human-like fakes

- Cyabra.com is an AI-based system that helps organizations be on guard against disinformation attacks[1st seen in 1]. Reuters.com reporting from July 2020.

SSF! wiki proposed countermeasure to synthetic porn: Adequate Porn Watcher AI (transcluded)

Transcluded from Adequate Porn Watcher AI

Adequate Porn Watcher AI (APW_AI) is an w:AI and w:computer vision concept for searching for any and all porn that should not exist, by watching and modeling all porn ever found on the w:Internet, thus effectively protecting humans by exposing covert naked digital look-alike attacks and other contraband.

Obs. A service identical to APW_AI used to exist - FacePinPoint.com

The method and the effect

The method by which APW_AI would provide safety and security to its users is that they can briefly upload a model they have obtained of themselves, and the APW_AI will then either report that nothing matching was found or give its opinion that something matching was found.
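One way such a match/no-match answer could be produced is a similarity search over face embeddings with a threshold. The sketch below is hypothetical throughout: it assumes the uploaded model and every indexed face have already been reduced to embedding vectors by some face-recognition model, and the names `check_model` and `index`, the location strings and the threshold of 0.9 are illustrative, not part of any existing APW_AI or FacePinPoint.com implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def check_model(
    user_embedding: Sequence[float],
    index: Dict[str, Sequence[float]],
    threshold: float = 0.9,  # hypothetical: higher means fewer false positives
) -> Tuple[bool, List[str]]:
    """Return (match_found, locations whose face embedding is similar enough)."""
    hits = [loc for loc, emb in index.items() if cosine(user_embedding, emb) >= threshold]
    return bool(hits), sorted(hits)


# Made-up index: location identifier -> embedding of a face seen there.
index = {
    "site-a/video-17@00:42": [0.98, 0.10, 0.05],
    "site-b/image-3": [0.05, 0.99, 0.10],
}
print(check_model([1.0, 0.1, 0.0], index))  # (True, ['site-a/video-17@00:42'])
```

Raising the threshold trades missed matches for fewer false alarms, which is the balance the adequacy definition below asks for.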

If people are able to check whether there is synthetic porn that looks like themselves, the product of the synthetic hate-illustration industrialists loses destructive potential: the attacks that do happen are less destructive, as they are exposed by the APW_AI, which decimates the monetary value of these disinformation weapons to the criminals.

If you feel comfortable leaving your model with the good people at the benefactor for safekeeping, you get alerted and helped if you are ever targeted by a synthetic porn attack.

Rules

Looking up whether matches are found for anyone else's model is forbidden, and this should probably be enforced with a w:biometric w:facial recognition system app that checks that the model you want checked is yours and that you are awake.

Definition of adequacy

An adequate implementation should be nearly free of false positives, very good at finding true positives and able to process more porn than is ever uploaded.
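These three criteria map onto standard evaluation measures: "nearly free of false positives" is high precision, "very good at finding true positives" is high recall, and "able to process more porn than is ever uploaded" is a scanning throughput above the upload rate. The sketch below computes them from counts; the cut-offs in `is_adequate` (0.99 precision, 0.95 recall) and the example counts are hypothetical placeholders, not figures from the APW_AI concept.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    true_positives: int        # attacks correctly flagged
    false_positives: int       # innocent material wrongly flagged
    false_negatives: int       # attacks that were missed
    processed_per_hour: float  # items the system can scan per hour
    uploaded_per_hour: float   # items uploaded to the Internet per hour

    @property
    def precision(self) -> float:
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 1.0

    @property
    def recall(self) -> float:
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 1.0


def is_adequate(e: Evaluation, min_precision: float = 0.99, min_recall: float = 0.95) -> bool:
    """Hypothetical cut-offs for 'nearly free of false positives' and 'very good at true positives'."""
    return (
        e.precision >= min_precision
        and e.recall >= min_recall
        and e.processed_per_hour > e.uploaded_per_hour
    )


# Made-up counts from an imagined test run.
run = Evaluation(true_positives=980, false_positives=5, false_negatives=20,
                 processed_per_hour=2_000_000, uploaded_per_hour=1_500_000)
print(f"precision={run.precision:.3f} recall={run.recall:.3f} adequate={is_adequate(run)}")
# precision=0.995 recall=0.980 adequate=True
```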

What about the people in the porn-industry?

People who openly do porn can help by opting in to the development, providing training material and material to test the AI on. People and companies who help in training the AI naturally get credited for their help.

There are, of course, many people-related questions to this, and those questions need to be identified by professionals of psychology and the social sciences.

History

The idea of APW_AI occurred to User:Juho Kunsola on Friday 2019-07-12. Subsequently (the next day) this discovery caused the scrapping of the plea to ban covert modeling of human appearance, as that would have rendered APW_AI legally impossible.

Countermeasures elsewhere

Partial transclusion from Organizations, studies and events against synthetic human-like fakes

Companies against synthetic filth

- Alecto AI at alectoai.com[1st seen in 2], a provider of AI-based face information analytics, founded in 2021 in Palo Alto.

- Facenition.com, an NZ company founded in 2019 with an ingenious method to hunt for fake human-like images. It has probably been purchased by, merged with or licensed to ThatsMyFace.com

- ThatsMyFace.com[1st seen in 2], an Australian company.[contacted 1] Previously, another company in the USA had this same name and domain name.[6]

A service identical to APW_AI used to exist - FacePinPoint.com

Partial transclusion from FacePinPoint.com

FacePinPoint.com was a for-a-fee service from 2017 to 2021 for pointing out where in pornography sites a particular face appears, or in the case of synthetic pornography, a digital look-alike makes make-believe of a face or body appearing.[contacted 2] The inventor and founder of FacePinPoint.com, Mr. Lionel Hagege, registered the domain name in 2015[7], when he set out to research the feasibility of his action plan idea against non-consensual pornography.[8] The description of how FacePinPoint.com worked is the same as Adequate Porn Watcher AI (concept)'s description.

Possible legal response: Outlawing digital sound-alikes (transcluded)

Transcluded from Juho's proposal on banning digital sound-alikes

Timeline of synthetic human-like fakes

2020's synthetic human-like fakes

- 2020 | demonstration | In Event of Moon Disaster - FULL FILM at youtube.com by the moondisaster.org project by the Center for Advanced Virtuality of the MIT makes a synthetic human-like fake in the appearance and almost in the sound of Nixon.

- 2020 | US state law | January 1 [9] the w:California state law AB-602 came into effect banning the manufacturing and distribution of synthetic pornography without the w:consent of the people depicted. AB-602 provides victims of synthetic pornography with injunctive relief and poses legal threats of statutory and w:punitive damages on w:criminals making or distributing synthetic pornography without consent. The bill AB-602 was signed into law by California Governor w:Gavin Newsom on October 3 2019 and was authored by w:California State Assembly member w:Marc Berman.[10]

- 2020 | Chinese legislation | On January 1 a Chinese law requiring that synthetically faked footage bear a clear notice about its fakeness came into effect. Failure to comply could be considered a w:crime, the w:Cyberspace Administration of China stated on its website. China announced this new law in November 2019.[11] The Chinese government seems to be reserving the right to prosecute both users and w:online video platforms failing to abide by the rules.[12]

2010's synthetic human-like fakes

- 2019 | demonstration | In September 2019 w:Yle, the Finnish w:public broadcasting company, aired a result of experimental w:journalism, a deepfake of the President in office w:Sauli Niinistö in its main news broadcast for the purpose of highlighting the advancing disinformation technology and problems that arise from it.

- 2019 | US state law | Since September 1, w:Texas senate bill SB 751 w:amendments to the election code have been in effect, giving w:candidates in w:elections a 30-day protection period before the elections during which making and distributing digital look-alikes or synthetic fakes of the candidates is an offense. The law text defines the subject of the law as "a video, created with the intent to deceive, that appears to depict a real person performing an action that did not occur in reality"[13]

- 2019 | US state law | Since July 1[14] w:Virginia has criminalized the sale and dissemination of unauthorized synthetic pornography, but not its manufacture,[15] as § 18.2-386.2, titled 'Unlawful dissemination or sale of images of another; penalty.', became part of the w:Code of Virginia. The law text states: "Any person who, with the intent to coerce, harass, or intimidate, maliciously disseminates or sells any videographic or still image created by any means whatsoever that depicts another person who is totally nude, or in a state of undress so as to expose the genitals, pubic area, w:buttocks, or female w:breast, where such person knows or has reason to know that he is not w:licensed or authorized to disseminate or sell such videographic or still image is guilty of a Class 1 misdemeanor."[15] The identical bills were House Bill 2678, presented by Delegate w:Marcus Simon to the w:Virginia House of Delegates on January 14 2019, and Senate bill 1736, introduced three days later to the w:Senate of Virginia by Senator w:Adam Ebbin.

- 2019 | science and demonstration | 'Speech2Face: Learning the Face Behind a Voice' at arXiv.org, a system for generating likely facial features based on the voice of a person, presented by the w:MIT Computer Science and Artificial Intelligence Laboratory at the 2019 CVPR. Speech2Face at github.com. This may develop into something that really causes problems. "Speech2Face: Neural Network Predicts the Face Behind a Voice" reporting at neurohive.io, "Speech2Face Sees Voices and Hears Faces: Dreams Come True with AI" reporting at belitsoft.com

- 2019 | crime | 'An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft', a 2019 Washington Post article

- 2019 | demonstration | 'Which Face is real?' at whichfaceisreal.com is an easily unnerving game by Carl Bergstrom and Jevin West where you need to try to distinguish from a pair of photos which is real and which is not. A part of the "tools" of the Calling Bullshit course taught at the w:University of Washington. Relevancy: certain

- 2019 | demonstration | 'Thispersondoesnotexist.com' (since February 2019) by Philip Wang. It showcases a w:StyleGAN at the task of making an endless stream of pictures that look like no-one in particular, but are eerily human-like. Relevancy: certain

- 2019 | action | w:Nvidia w:open sources w:StyleGAN, a novel w:generative adversarial network.[16]

- 2018 | counter-measure | In September 2018 Google added “involuntary synthetic pornographic imagery” to its ban list, allowing anyone to request the search engine block results that falsely depict them as “nude or in a sexually explicit situation.”[17] Information on removing involuntary fake pornography from Google at support.google.com if it shows up in Google and the form to request removing involuntary fake pornography at support.google.com, select "I want to remove: A fake nude or sexually explicit picture or video of myself"

- 2018 | science and demonstration | The work 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' (at arXiv.org) was presented at the 2018 w:Conference on Neural Information Processing Systems (NeurIPS). The pre-trained model is able to steal voices from a sample of only 5 seconds with almost convincing results.

- 2018 | demonstration | At the 2018 w:World Internet Conference in w:Wuzhen the w:Xinhua News Agency presented two digital look-alikes made to the resemblance of its real news anchors Qiu Hao (w:Chinese language)[18] and Zhang Zhao (w:English language). The digital look-alikes were made in conjunction with w:Sogou.[19] Neither the w:speech synthesis used nor the gesturing of the digital look-alike anchors was good enough to deceive the watcher into mistaking them for real humans imaged with a TV camera.

- 2018 | controversy / demonstration | The w:deepfakes controversy surfaces, in which porn videos were doctored utilizing deep machine learning so that the face of the actress was replaced by the software's opinion of what another person's face would look like in the same pose and lighting.

- 2017 | science | 'Synthesizing Obama: Learning Lip Sync from Audio' at grail.cs.washington.edu. At SIGGRAPH 2017, Supasorn Suwajanakorn et al. of the w:University of Washington presented an audio-driven digital look-alike of the upper torso of Barack Obama. After a training phase in which w:lip sync and wider facial information were acquired from w:training material consisting of 2D videos with audio, it was driven only by a voice track as source data for the animation.[20] Relevancy: certain

- 2016 | movie | w:Rogue One is a Star Wars film for which digital look-alikes of actors w:Peter Cushing and w:Carrie Fisher were made. In the film they appear to be the same age as the actors were during the filming of the original 1977 w:Star Wars (film).

- 2016 | science / demonstration | w:DeepMind's w:WaveNet owned by w:Google also demonstrated ability to steal people's voices

- 2016 | science and demonstration | w:Adobe Inc. publicly demonstrates w:Adobe Voco, a sound-like-anyone machine: '#VoCo. Adobe Audio Manipulator Sneak Peak with Jordan Peele | Adobe Creative Cloud' on Youtube. The original Adobe Voco required a 20-minute sample to thieve a voice. Relevancy: certain.

- 2016 | science | 'Face2Face: Real-time Face Capture and Reenactment of RGB Videos' at Niessnerlab.org. A paper (with videos) on semi-real-time 2D video manipulation with gesture forcing and lip sync forcing synthesis by Thies et al., Stanford. Relevancy: certain

- 2015 | movie | In w:Furious 7, a digital look-alike of the actor w:Paul Walker, who died in an accident during the filming, was made by w:Weta Digital to enable the completion of the film.[21]

- 2014 | science | w:Ian Goodfellow et al. presented the principles of a w:generative adversarial network. GANs made the headlines in early 2018 with the w:deepfakes controversies.

- 2013 | demonstration | At SIGGRAPH 2013, w:Activision and USC presented "Digital Ira", a real-time digital face look-alike of Ari Shapiro, an ICT USC research scientist,[22] utilizing the USC light stage X by Ghosh et al. for both reflectance field and motion capture.[23] The end result, shown both as precomputed and as real-time rendering on the most modern game GPU of the time, looks fairly realistic.

- 2013 | demonstration | 'Scanning and Printing a 3D Portrait of President Barack Obama' at ict.usc.edu. A 7D model and a 3D bust were made of President Obama with his consent. Relevancy: certain
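The 2014 entry on the principles of a generative adversarial network refers to a two-player game between a generator $G$, which maps random noise $z$ to synthetic samples, and a discriminator $D$, which estimates the probability that a sample came from the real data rather than from $G$. In the notation of Goodfellow et al., the value function is:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

$D$ is trained to maximize this, i.e. to tell real samples from generated ones, while $G$ is trained to minimize it, i.e. to make fakes that $D$ cannot tell apart from real data. Image generators such as the StyleGAN listed in this timeline build on this adversarial setup.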

2000's synthetic human-like fakes

- 2010 | movie | w:Walt Disney Pictures released a sci-fi sequel entitled w:Tron: Legacy with a digitally rejuvenated digital look-alike made of the actor w:Jeff Bridges playing the w:antagonist CLU.

- 2009 | movie | A digital look-alike of a younger w:Arnold Schwarzenegger was made for the movie w:Terminator Salvation though the end result was critiqued as unconvincing. Facial geometry was acquired from a 1984 mold of Schwarzenegger.

- 2009 | demonstration | Paul Debevec: 'Animating a photo-realistic face' at ted.com. Debevec et al. presented new digital likenesses, made by w:Image Metrics, this time of actress w:Emily O'Brien, whose reflectance was captured with the USC light stage 5. At 00:04:59 you can see two clips, one with the real Emily shot with a real camera and one with a digital look-alike of Emily shot with a simulation of a camera; which is which is difficult to tell. Bruce Lawmen was scanned using USC light stage 6 in a still position and also recorded running there on a w:treadmill. Many, many digital look-alikes of Bruce are seen running fluently and naturally in the ending sequence of the TED talk video.[24] The motion looks fairly convincing compared to the clunky run in The Animatrix: Final Flight of the Osiris, which was w:state-of-the-art in 2003 if photorealism was the intention of the w:animators.

- 2004 | movie | The w:Spider-man 2 (and w:Spider-man 3, 2007) films. Relevancy: The films include a digital look-alike made of actor w:Tobey Maguire by w:Sony Pictures Imageworks.[25]

- 2003 | short film | The Animatrix: Final Flight of the Osiris, made by Square Pictures, features w:state-of-the-art would-be human likenesses that do not quite fool the watcher.

- 2003 | movie(s) | The w:Matrix Reloaded and w:Matrix Revolutions films. Relevancy: First public display of digital look-alikes that are virtually indistinguishable from the real actors.

- 2002 | music video | 'Bullet' by Covenant on Youtube by w:Covenant (band) from their album w:Northern Light (Covenant album). Relevancy: Contains the best upper-torso digital look-alike of Eskil Simonsson (vocalist) that their organization could procure at the time. Here you can observe the classic "skin looks like cardboard"-bug (assuming this was not intended) that thwarted efforts to make digital look-alikes that pass human testing before the reflectance capture and dissection in 1999 by w:Paul Debevec et al. at the w:University of Southern California and the subsequent development of the "Analytical BRDF" (quote-unquote) by ESC Entertainment, a company led by George Borshukov and set up for the sole purpose of making the cinematography for the 2003 films Matrix Reloaded and Matrix Revolutions possible.

1990's synthetic human-like fakes

An analytical BRDF must take into account the subsurface scattering, or the end result will not pass human testing.

- 1999 | science | 'Acquiring the reflectance field of a human face' paper at dl.acm.org. w:Paul Debevec et al. of USC did the first known reflectance capture over the human face with their extremely simple w:light stage. They presented their method and results at w:SIGGRAPH 2000. The scientific breakthrough required finding the w:subsurface light component (the simulation models are glowing from within slightly), which can be found using the knowledge that light reflected from the oil-to-air layer retains its w:Polarization (waves), while the subsurface light loses its polarization. So, equipped only with a movable light source, a movable video camera, 2 polarizers and a computer program doing extremely simple math, they acquired the last piece required to reach photorealism.[1]

- 1999 | institute founded | The w:Institute for Creative Technologies was founded by the w:United States Army at the w:University of Southern California. It collaborates with the w:United States Army Futures Command, w:United States Army Combat Capabilities Development Command, w:Combat Capabilities Development Command Soldier Center and w:United States Army Research Laboratory.[26]

- 1994 | movie | The Crow was the first film production to make use of w:digital compositing of a computer-simulated representation of a face onto scenes filmed using a w:body double. Necessity was the muse, as the actor w:Brandon Lee, portraying the protagonist, was tragically killed in an accident on-stage.
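The polarization trick in the 1999 entry comes down to per-pixel arithmetic. Assume linearly polarized illumination, an ideal polarizer on the camera, and subsurface light that is fully depolarized, so half of it passes the camera polarizer at any angle. Then an image taken with the camera polarizer parallel to the light's polarization contains S + D/2 (specular plus half the subsurface light), and one taken with it crossed contains only D/2. The sketch below separates the two components from such a pair. It is an idealized illustration of the principle, not Debevec et al.'s actual processing, and the pixel values are made up.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of linear intensities


def separate_components(parallel: Image, cross: Image) -> Tuple[Image, Image]:
    """Split a parallel/cross-polarized image pair into specular and subsurface parts.

    Idealized model: parallel = S + D/2 and cross = D/2, so
    S = parallel - cross and D = 2 * cross.
    """
    specular: Image = []
    subsurface: Image = []
    for row_p, row_c in zip(parallel, cross):
        # Clamp at zero: sensor noise can make a parallel pixel slightly darker than its cross pixel.
        specular.append([max(p - c, 0.0) for p, c in zip(row_p, row_c)])
        subsurface.append([2.0 * c for c in row_c])
    return specular, subsurface


# Made-up 2x2 linear intensities; the top-left pixel has a strong oily highlight.
parallel = [[0.90, 0.40], [0.35, 0.30]]
cross = [[0.25, 0.20], [0.18, 0.15]]
spec, diff = separate_components(parallel, cross)
print(spec)
print(diff)
```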

1970's synthetic human-like fakes

- 1976 | movie | w:Futureworld reused parts of A Computer Animated Hand on the big screen.

- 1972 | entertainment | 'A Computer Animated Hand' on Vimeo. w:A Computer Animated Hand by w:Edwin Catmull and w:Fred Parke. Relevancy: This was the first time that computer-generated imagery was used in film to animate moving human-like appearance.

- 1971 | science | 'Images de synthèse : palme de la longévité pour l’ombrage de Gouraud' (still photos). w:Henri Gouraud (computer scientist) made the first w:Computer graphics w:geometry w:digitization and representation of a human face. His model was his wife, Sylvie Gouraud. The 3D model was a simple w:wire-frame model, and he applied w:Gouraud shading to produce the first known representation of human-likeness on a computer.[27]
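Gouraud shading computes a lighting intensity only at the vertices of each polygon and linearly interpolates those intensities across the polygon's interior, which hides the facets of a coarse model such as Gouraud's wire-frame face. The sketch below shows the idea for a single triangle, assuming simple Lambertian (diffuse) vertex lighting and barycentric interpolation, which for a triangle gives the same result as interpolating along edges and then along scanlines. The light direction, normals and pixel coordinates are made up, and a real renderer would also handle visibility and rasterization.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def normalize(v: Vec3) -> Vec3:
    """Unit-length copy of a non-zero 3D vector."""
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def lambert(normal: Vec3, light_dir: Vec3) -> float:
    """Diffuse intensity at a vertex: max(0, N . L) with unit vectors."""
    n, l = normalize(normal), normalize(light_dir)
    return max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])


def barycentric(p: Point2, a: Point2, b: Point2, c: Point2) -> Tuple[float, float, float]:
    """Weights (wa, wb, wc) of point p with respect to triangle abc in screen space."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    wa = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    wb = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return wa, wb, 1.0 - wa - wb


def gouraud(p: Point2, verts: Sequence[Point2], intensities: Sequence[float]) -> float:
    """Interpolate precomputed vertex intensities to pixel p."""
    w = barycentric(p, verts[0], verts[1], verts[2])
    return sum(wi * ii for wi, ii in zip(w, intensities))


light = (0.0, 0.0, 1.0)  # made-up direction toward the light
normals = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (0.0, 0.7, 1.0)]  # made-up vertex normals
verts = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # triangle in pixel coordinates
vertex_intensity = [lambert(n, light) for n in normals]
print(round(gouraud((3.0, 3.0), verts, vertex_intensity), 3))
```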

1770's synthetic human-like fakes

- 1791 | science | w:Wolfgang von Kempelen's Speaking Machine, built by w:Wolfgang von Kempelen of w:Pressburg, w:Hungary, and described in a 1791 paper, was w:bellows-operated.[28] This machine added models of the tongue and lips, enabling it to produce w:consonants as well as w:vowels. (based on w:Speech synthesis#History)

- 1779 | science / discovery | w:Christian Gottlieb Kratzenstein won the first prize in a competition announced by the w:Russian Academy of Sciences for models he built of the human w:vocal tract that could produce the five long w:vowel sounds.[29] (Based on w:Speech synthesis#History)

Media perhaps about synthetic human-like fakes

This is a chronological listing of media that are probably related to synthetic human-like fakes.

The links currently include scripture, science, demonstrations, music videos, music, entertainment and movies.

6th century BC

Daniel 7, Daniel's vision of the three beasts Dan 7:1-6 and the fourth beast Dan 7:7-8 from the sea and the Ancient of Days Dan 7:9-10

- w:6th century BC | scripture | w:Daniel (biblical figure) was in w:Babylonian captivity when he had his visions, in which God first warned us of synthetic human-like fakes.

- His testimony was put into written form in the 3rd century BC.

3rd century BC

- w:3rd century BC | scripture | The w:Book of Daniel was put in writing.

- See Biblical explanation - The books of Daniel and Revelation § Daniel 7. Caution to reader: contains explicit written information about the beasts.

1st century

- w:1st century | scripture | w:Jesus teaches about things that are yet to come in

- 1st century | scripture | w:2 Thessalonians 2 is the second chapter of the w:Second Epistle to the Thessalonians. It is traditionally attributed to w:Paul the Apostle, with w:Saint Timothy as a co-author. See Biblical explanation - The books of Daniel and Revelation § 2 Thessalonians 2 Caution to reader: contains explicit written information about the beasts

- 1st century | scripture | w:Book of Revelation. The task of writing down and smuggling out this early warning of what is to come is given by God to his servant John, who was imprisoned on the island of w:Patmos. See Biblical explanation - The books of Daniel and Revelation § Revelation 13. Caution to reader: contains explicit written information about the beasts.

1980's

- 1983 | music video | 'Musique Non-Stop' by Kraftwerk on Youtube, made in 1983 but published only in 1986, by w:Kraftwerk from the album w:Electric Café. Relevancy: Contains state-of-the-art (for the era) digital look-alikes of the band members.

- 1986 | music video | 'Paranoimia' by Art of Noise on Youtube. w:Paranoimia by w:Art of Noise featuring Max Headroom from the album w:In Visible Silence. Relevancy: Contains a state-of-the-art (for the era) synthetic human-like character, w:Max Headroom.

1990's

- 1998 | music video | 'Rabbit in Your Headlights' by UNKLE on Youtube by w:Unkle and featuring w:Thom Yorke on the lyrics. Wikipedia on 'Rabbit in Your Headlights' music video. Relevancy: Contains shots that would have injured / killed a human actor.

- 1998 | music | 'New Model No. 15' by Marilyn Manson (lyrics in video) on Youtube by w:Marilyn Manson (band) from the album w:Mechanical Animals. Relevancy: The lyrics are obviously about digital look-alikes approaching.

- 1998 | music video | 'The Dope Show' by Marilyn Manson (lyric video) on Youtube (official music video) by w:Marilyn Manson (band) from the album w:Mechanical Animals. Relevancy: lyrics

2000's

- 2001 | music video | 'Evolution Revolution Love' by Tricky on Youtube by w:Tricky (musician) from the w:Blowback (album) and featuring w:Ed Kowalczyk. Relevancy: See video

- 2001 | music video | 'Plug In Baby' by Muse by w:Muse (band) from their album w:Origin of Symmetry. Relevancy: See video

- 2005 | music video | Nine Inch Nails music video for 'Only' at Youtube.com w:Only (Nine Inch Nails song) by the w:Nine Inch Nails. Relevancy: check the lyrics, check the video

- 2006 | music video | 'John The Revelator' by Depeche Mode (official music video) on Youtube by w:Depeche Mode from the single w:John the Revelator / Lilian. Relevancy: Book of Revelations.

2010's

- 2013 | music | 'In Two' by the Nine Inch Nails (lyric video) on Youtube by w:Nine Inch Nails from the album w:Hesitation Marks. Relevancy: The lyrics seem to be about appearance theft.

- 2013 | music | 'Copy of A' by the Nine Inch Nails (lyric video) on Youtube by w:Nine Inch Nails from the album w:Hesitation Marks. Relevancy: The lyrics seem to be about appearance theft.

- 2013 | music video | 'Before Your Very Eyes' by Atoms For Peace (official music video) on Youtube by w:Atoms for Peace (band) from their album w:Amok (Atoms for Peace album). Relevancy: Watch the video

- 2016 | music video | 'Voodoo In My Blood' (official music video) by Massive Attack on Youtube by w:Massive Attack and featuring w:Tricky from the album w:Ritual Spirit. Relevancy: How many machines can you see in the same frame at times? If you answered one, look harder and make a more educated guess.

- 2016 | music video | 'The Spoils' by Massive Attack on Youtube by w:Massive Attack featuring w:Hope Sandoval. Wikipedia on The Spoils (song) Relevancy: The video contains synthesis of human-like likenesses.

- 2018 | music video | 'Simulation Theory' album by Muse on Youtube by w:Muse (band) from the w:Simulation Theory (album). Obs. "The Pause," "Watch What I Do" and "The Interlude" are not part of the album. Relevancy: Whole album

2020's

- 2022 | movie | w:The Matrix 4 (2022) will be the 4th installment of the w:The Matrix (franchise). Relevancy: High likelihood of relevance, but unknown as this film is not yet ready or released.

Footnotes

- ↑ It is terminologically more precise, more inclusive and more useful to talk about 'synthetic terror porn', if we want to call things by their real names, than 'synthetic rape porn', because synthesizing recordings of consensual-looking sex scenes can also be terroristic in intent.

- ↑ Whether a suspect can defend against faked synthetic speech that sounds like him/her depends on how up-to-date the judiciary is. If no information and instructions about digital sound-alikes have been given to the judiciary, they likely will not believe the defense of denying that the recording is of the suspect's voice.

1st seen in

References

- ↑ 1.0 1.1 Debevec, Paul (2000). "Acquiring the reflectance field of a human face". Proceedings of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH '00. ACM. pp. 145–156. doi:10.1145/344779.344855. ISBN 978-1581132083. Retrieved 2020-06-27.

- ↑ "Fake voices 'help cyber-crooks steal cash'". w:bbc.com. w:BBC. 2019-07-08. Retrieved 2020-07-22.

- ↑ Harwell, Drew (2020-04-16). "An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft". w:washingtonpost.com. w:Washington Post. Retrieved 2019-07-22.

- ↑ https://web.archive.org/web/20160630154819/https://www.darpa.mil/program/media-forensics

- ↑ https://web.archive.org/web/20191108090036/https://www.darpa.mil/program/semantic-forensics November

- ↑ https://www.crunchbase.com/organization/thatsmyface-com

- ↑ whois facepinpoint.com

- ↑ https://www.facepinpoint.com/aboutus

- ↑ Johnson, R.J. (2019-12-30). "Here Are the New California Laws Going Into Effect in 2020". KFI. iHeartMedia. Retrieved 2020-07-13.

- ↑ Mihalcik, Carrie (2019-10-04). "California laws seek to crack down on deepfakes in politics and porn". w:cnet.com. w:CNET. Retrieved 2020-07-13.

- ↑ "China seeks to root out fake news and deepfakes with new online content rules". w:Reuters.com. w:Reuters. 2019-11-29. Retrieved 2020-07-13.

- ↑ Statt, Nick (2019-11-29). "China makes it a criminal offense to publish deepfakes or fake news without disclosure". w:The Verge. Retrieved 2020-07-13.

- ↑ "Relating to the creation of a criminal offense for fabricating a deceptive video with intent to influence the outcome of an election". w:Texas. 2019-06-14. Retrieved 2020-07-13.

In this section, "deep fake video" means a video, created with the intent to deceive, that appears to depict a real person performing an action that did not occur in reality

- ↑ "New state laws go into effect July 1".

- ↑ 15.0 15.1 "§ 18.2-386.2. Unlawful dissemination or sale of images of another; penalty". w:Virginia. Retrieved 2020-07-13.

- ↑ "NVIDIA Open-Sources Hyper-Realistic Face Generator StyleGAN". Medium.com. 2019-02-09. Retrieved 2020-07-13.

- ↑ Harwell, Drew (2018-12-30). "Fake-porn videos are being weaponized to harass and humiliate women: 'Everybody is a potential target'". w:The Washington Post. Retrieved 2020-07-13.

In September [of 2018], Google added “involuntary synthetic pornographic imagery” to its ban list

- ↑ Kuo, Lily (2018-11-09). "World's first AI news anchor unveiled in China". Retrieved 2020-07-13.

- ↑ Hamilton, Isobel Asher (2018-11-09). "China created what it claims is the first AI news anchor — watch it in action here". Retrieved 2020-07-13.

- ↑ Suwajanakorn, Supasorn; Seitz, Steven; Kemelmacher-Shlizerman, Ira (2017), Synthesizing Obama: Learning Lip Sync from Audio, University of Washington, retrieved 2020-07-13

- ↑ Giardina, Carolyn (2015-03-25). "'Furious 7' and How Peter Jackson's Weta Created Digital Paul Walker". The Hollywood Reporter. Retrieved 2020-07-13.

- ↑ ReForm - Hollywood's Creating Digital Clones (youtube). The Creators Project. 2020-07-13.

- ↑ Debevec, Paul. "Digital Ira SIGGRAPH 2013 Real-Time Live". Retrieved 2017-07-13.

- ↑ In this TED talk video, at 00:04:59, you can see two clips: one of the real Emily, shot with a real camera, and one of a digital look-alike of Emily, shot with a simulated camera. It is difficult to tell which is which. Bruce Lawmen was scanned using USC Light Stage 6 in a still position and was also recorded running there on a w:treadmill. In the closing sequence of the TED talk video, many digital look-alikes of Bruce are seen running fluently and naturally.

- ↑ Pighin, Frédéric. "Siggraph 2005 Digital Face Cloning Course Notes" (PDF). Retrieved 2020-06-26.

- ↑ https://ict.usc.edu/about/

- ↑ "Images de synthèse : palme de la longévité pour l'ombrage de Gouraud" ("Computer-generated images: longevity award for Gouraud shading").

- ↑ Mechanismus der menschlichen Sprache nebst der Beschreibung seiner sprechenden Maschine ("Mechanism of the human speech with description of its speaking machine", J. B. Degen, Wien).

- ↑ History and Development of Speech Synthesis, Helsinki University of Technology, Retrieved on November 4, 2006
