Synthetic human-like fakes: Difference between revisions

Juho Kunsola (talk | contribs) (+ === 1st century === + w:Jesus teaches about things that are yet to come + w:Matthew 24 + w:The Sheep and the Goats''' + w:Mark 13) |

Juho Kunsola (talk | contribs) (→1st century: + w:2 Thessalonians 2 is the second chapter of the w:Second Epistle to the Thessalonians + See Biblical explanation - The books of Daniel and Revelations § 2 Thessalonians 2 + written caution about explicit written contents) |


Revision as of 17:26, 27 June 2020

When the camera does not exist, but the subject imaged with a simulation of a (movie) camera deceives the watcher into believing it is some living or dead person, it is a digital look-alike.

When human testing cannot determine whether a voice is a synthetic fake of some person's voice or an actual recording of that person's real voice, it is a digital sound-alike.

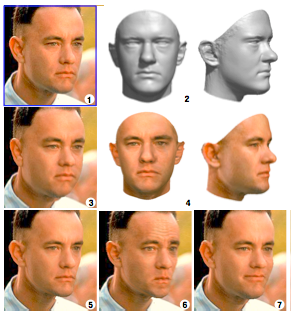

(1) Sculpting a morphable model to one single picture

(2) Produces 3D approximation

(4) Texture capture

(3) The 3D model is rendered back to the image with weight gain

(5) With weight loss

(6) Looking annoyed

(7) Forced to smile

Image 2 by Blanz and Vetter – Copyright ACM 1999 – http://dl.acm.org/citation.cfm?doid=311535.311556 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

Digital look-alikes

Introduction to digital look-alikes

Original picture by Debevec et al. - Copyright ACM 2000 https://dl.acm.org/citation.cfm?doid=311779.344855

In the cinemas we have seen digital look-alikes for over 15 years. These digital look-alikes have "clothing" (a simulation of clothing is not clothing) or "superhero costumes" and "superbaddie costumes", and they don't need to care about the laws of physics, let alone the laws of physiology. It is generally accepted that digital look-alikes made their public debut in the sequels of The Matrix, i.e. w:The Matrix Reloaded and w:The Matrix Revolutions, released in 2003. It can be considered almost certain that it was not possible to make these before 1999, as the final piece of the puzzle needed to make a (still) digital look-alike that passes human testing, the reflectance capture over the human face, was made for the first time in 1999 at the w:University of Southern California and was presented to the crème de la crème of the computer graphics field at their annual gathering, SIGGRAPH 2000.[1]

“Do you think that was Hugo Weaving's left cheekbone that Keanu Reeves punched in with his right fist?”

The problems with digital look-alikes

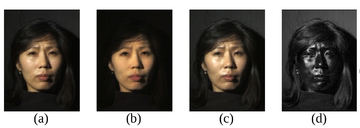

(a) Normal image in dot lighting

(b) Image of the diffuse reflection, which is caught by placing a vertical polarizer in front of the light source and a horizontal one in front of the camera

(c) Image of the highlight specular reflection which is caught by placing both polarizers vertically

(d) Subtraction of b from c, which yields the specular component

Images are scaled to seem to be the same luminosity.

Original image by Debevec et al. – Copyright ACM 2000 – https://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.
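The separation in panel (d) can be sketched as a per-pixel subtraction. This is only a minimal illustration, not the authors' pipeline: it assumes that the image taken with both polarizers vertical (c) contains diffuse plus specular light, that the cross-polarized image (b) contains only diffuse light, and that both are aligned grayscale images. The function name and the pixel values are hypothetical.

```python
def separate_specular(parallel, cross):
    """Return the per-pixel difference (parallel - cross), clamped at zero.

    parallel: image with both polarizers vertical (assumed diffuse + specular)
    cross:    image with crossed polarizers (assumed diffuse only)
    Both are lists of rows of integer intensities with the same shape.
    """
    return [
        [max(p - x, 0) for p, x in zip(row_parallel, row_cross)]
        for row_parallel, row_cross in zip(parallel, cross)
    ]


# Hypothetical 2x2 grayscale intensities in the range 0..255
parallel = [[230, 100], [120, 80]]  # panel (c)
cross = [[100, 100], [90, 85]]      # panel (b)

specular = separate_specular(parallel, cross)
```

Clamping at zero is a design choice of this sketch: sensor noise can make the cross-polarized pixel slightly brighter than the parallel one, and a negative intensity would not be meaningful.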

Extremely unfortunately for humankind, organized criminal leagues that possess the weapons capability of making believable-looking synthetic pornography are producing synthetic terror porn[footnote 1] on industrial production pipelines by animating digital look-alikes, and are distributing it on the murky Internet in exchange for money stacks that are getting thinner and thinner as time goes by.

These industrially produced pornographic delusions are causing great human suffering, especially in their direct victims, but they are also tearing our communities and societies apart, sowing blind rage, perceptions of deepening chaos and feelings of powerlessness, and provoking violence. This hate illustration increases and strengthens hate thinking, hate speech and hate crimes, tears our fragile social constructions apart and, with time, perverts humankind's view of humankind into an almost unrecognizable shape, unless we interfere with resolve.

For these reasons the bannable raw materials, i.e. covert models, needed to produce this disinformation terror on the information-industrial production pipelines should be prohibited by law in order to protect humans from arbitrary abuse by criminal parties.

List of possible naked digital look-alike attacks

- The classic "portrayal of as if in involuntary sex"-attack. (Digital look-alike "cries")

- "Sexual preference alteration"-attack. (Digital look-alike "smiles")

How to counter synthetic porn: Adequate Porn Watcher AI (transcluded)

Adequate Porn Watcher AI (APW_AI) is an w:AI and w:computer vision concept to search for any and all porn that should not exist, by watching and modeling all porn ever found on the w:Internet, thus effectively protecting humans by exposing covert naked digital look-alike attacks and also other contraband.

Obs. A service identical to APW_AI used to exist - FacePinPoint.com

The method and the effect

The method by which APW_AI would provide safety and security to its users is that they can briefly upload a model they have gotten of themselves, and the APW_AI will then either report that nothing matching was found or be of the opinion that something matching was found.

If people are able to check whether there is synthetic porn that looks like themselves, the products of the synthetic hate-illustration industrialists lose destructive potential, and the attacks that do happen are less destructive because they are exposed by the APW_AI. This decimates the monetary value of these disinformation weapons to the criminals.

If you feel comfortable leaving your model with the good people at the benefactor for safekeeping, you get alerted and helped if you are ever attacked with synthetic porn.

Rules

Looking up whether matches are found for anyone else's model is forbidden, and this should probably be enforced with a w:biometric w:facial recognition system app that checks that the model you want checked is yours and that you are awake.

Definition of adequacy

An adequate implementation should be nearly free of false positives, very good at finding true positives and able to process more porn than is ever uploaded.
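The first two criteria can be read as the standard precision and recall measures: "nearly free of false positives" corresponds to a precision close to 1, and "very good at finding true positives" corresponds to a high recall. The sketch below only illustrates how these would be computed from evaluation counts; the counts are invented for the example and are not results from any real system.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Compute precision and recall from raw evaluation counts.

    precision = TP / (TP + FP): how many reported matches were real
    recall    = TP / (TP + FN): how many real matches were reported
    """
    reported = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / reported if reported else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Invented counts: 95 correct matches, 1 false alarm, 5 missed matches
precision, recall = precision_recall(true_pos=95, false_pos=1, false_neg=5)
```

The third criterion, processing more porn than is ever uploaded, is a throughput requirement and is not captured by these two numbers.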

What about the people in the porn-industry?

People who openly do porn can help by opting-in to help in the development by providing training material and material to test the AI on. People and companies who help in training the AI naturally get credited for their help.

There are of course lots of people-questions to this and those questions need to be identified by professionals of psychology and social sciences.

History

The idea of APW_AI occurred to User:Juho Kunsola on Friday 2019-07-12. The next day this discovery caused the scrapping of the plea to ban covert modeling of human appearance, as such a ban would have rendered APW_AI legally impossible.

Countermeasures elsewhere

Partial transclusion from Organizations, studies and events against synthetic human-like fakes

Companies against synthetic filth

- Alecto AI at alectoai.com[1st seen in 1], a provider of AI-based face information analytics, founded in 2021 in Palo Alto.

- Facenition.com, an NZ company founded in 2019 with an ingenious method to hunt for fake human-like images. Probably has been purchased by, merged with or licensed by ThatsMyFace.com

- ThatsMyFace.com[1st seen in 1], an Australian company.[contacted 1] Previously, another company in the USA had this same name and domain name.[2]

A service identical to APW_AI used to exist - FacePinPoint.com

Partial transclusion from FacePinPoint.com

FacePinPoint.com was a for-a-fee service from 2017 to 2021 for pointing out where on pornography sites a particular face appears or, in the case of synthetic pornography, where a digital look-alike creates the make-believe of a face or body appearing.[contacted 2] The inventor and founder of FacePinPoint.com, Mr. Lionel Hagege, registered the domain name in 2015[3] when he set out to research the feasibility of his action plan idea against non-consensual pornography.[4] The description of how FacePinPoint.com worked is the same as the description of Adequate Porn Watcher AI (concept).

Resources

Tools

- w:PhotoDNA is an image-identification technology used for detecting w:child pornography and other illegal content reported to the w:National Center for Missing & Exploited Children (NCMEC) as required by law.[5] It was developed by w:Microsoft Research and w:Hany Farid, professor at w:Dartmouth College, beginning in 2009. (Wikipedia)

- The w:Child abuse image content list (CAIC List) is a list of URLs and image hashes provided by the w:Internet Watch Foundation to its partners to enable the blocking of w:child pornography & w:criminally obscene adult content in the UK and by major international technology companies. (Wikipedia).

Legal

Traditional porn-blocking

Traditional porn-blocking done by w:some countries seems to use w:DNS to deny access to porn sites: the resolver checks whether the requested domain name matches an item in a database of porn sites and, if it does, returns an unroutable address, usually w:0.0.0.0.
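The lookup logic described above can be sketched as follows. This is a simplified illustration, not how any particular country's resolver works: the function name, the `upstream` callable standing in for a real DNS lookup, and the example domains are all hypothetical, and matching parent domains in addition to the exact name is an assumption of this sketch.

```python
BLOCKED_ADDRESS = "0.0.0.0"  # unroutable address returned for blocked names


def resolve(domain, blocklist, upstream):
    """Answer a lookup, returning BLOCKED_ADDRESS for blocklisted names.

    blocklist: set of lowercase domain names to block
    upstream:  callable that performs the real lookup (hypothetical stand-in)
    """
    name = domain.lower().rstrip(".")
    labels = name.split(".")
    # Check the full name and each parent domain, but not the bare top-level label
    for start in range(max(len(labels) - 1, 1)):
        if ".".join(labels[start:]) in blocklist:
            return BLOCKED_ADDRESS
    return upstream(name)


# Hypothetical data: one blocked domain and a fake upstream resolver
blocklist = {"blocked.example"}
fake_upstream = {"example.org": "192.0.2.10"}.get

blocked_answer = resolve("www.Blocked.example.", blocklist, fake_upstream)
normal_answer = resolve("example.org", blocklist, fake_upstream)
```

Because the check happens only at name resolution, a user who already knows the site's IP address or uses a different resolver is not affected, which is why this kind of blocking is easy to circumvent.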

Topics on github.com

- Topic "porn-block" on github.com (8 repositories as of 2020-09)[1st seen in 2]

- Topic "pornblocker" on github.com (13 repositories as of 2020-09)[1st seen in 3]

- Topic "porn-filter" on github.com (35 repositories as of 2020-09)[1st seen in 4]

Curated lists and databases

- 'Awesome-Adult-Filtering-Accountability' a list at github.com curated by wesinator - a list of tools and resources for adult content/porn accountability and filtering[1st seen in 2]

- 'Pornhosts' at github.com by Import-External-Sources is a hosts-file formatted file of the w:Response policy zone (RPZ) zone file. It describes itself as "a consolidated anti porn hosts file" and states its mission as "an endeavour to find all porn domains and compile them into a single hosts to allow for easy blocking of porn on your local machine or on a network."[1st seen in 2]

- 'Amdromeda blocklist for Pi-hole' at github.com by Amdromeda[1st seen in 2] lists 50MB worth of just porn host names 1 (16.6MB) 2 (16.8MB) 3 (16.9MB) (As of 2020-09)

- 'Pihole-blocklist' at github.com by mhakim[1st seen in 4] 1

- 'superhostsfile' at github.com by universalbyte is an ongoing effort to chart out "negative" hosts.[1st seen in 3]

- 'hosts' at github.com by StevenBlack is a hosts file for negative sites. It is updated constantly from these sources and it lists 559k (1.64MB) of porn and other dodgy hosts (as of 2020-09)

- 'Porn-domains' at github.com by Bon appétit was (as of 2020-09) last updated in March 2019 and lists more than 22k domains.
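Several of the lists above are distributed in hosts-file format, where each line holds an address followed by one or more host names and `#` starts a comment. A minimal sketch of turning such a file into a set of blocked names might look like the following. It assumes that blocked entries point at `0.0.0.0`; real lists may use other sink addresses, and the sample text is invented.

```python
def parse_hosts(text, sink="0.0.0.0"):
    """Collect host names that a hosts-format text maps to the sink address."""
    domains = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = line.split()
        # First field is the address, the remaining fields are host names
        if len(fields) >= 2 and fields[0] == sink:
            domains.update(name.lower() for name in fields[1:])
    return domains


# Invented sample in hosts-file format
sample = """\
# sample blocklist
0.0.0.0 blocked.example
0.0.0.0 Another.Blocked.example  # inline comment
127.0.0.1 localhost
"""

blocked = parse_hosts(sample)
```

A set gives constant-time membership checks, which matters when a list holds hundreds of thousands of names.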

Porn blocking services

- w:Pi-hole - https://pi-hole.net/ - Network-wide Ad Blocking

Software for nudity detection

- 'PornDetector' at github.com by bakwc consists of two porn image (nudity) detectors, both written in w:Python (programming language)[1st seen in 4]. pcr.py uses w:scikit-learn and the w:OpenCV Open Source Computer Vision Library, whereas nnpcr.py uses w:TensorFlow and reaches a higher accuracy.

- 'Laravel 7 Google Vision restringe pornografia detector de faces' at github.com by thelesson is a porn restriction app in Portuguese that uses the Google Vision API to help site maintainers stop users from uploading porn. It has been written for the MiniBlog w:Laravel blog app.

Links regarding pornography censorship

- w:Pornography laws by region

- w:Internet pornography

- w:Legal status of Internet pornography

- w:Sex and the law

Against pornography

- Reasons for w:opposition to pornography include w:religious views on pornography, w:feminist views of pornography, and claims of w:effects of pornography, such as w:pornography addiction. (Wikipedia as of 2020-09-19)

Technical means of censorship and how to circumvent

- w:Internet censorship and w:internet censorship circumvention

- w:Content-control software (Internet filter), a common approach to w:parental control.

- w:Accountability software

- w:Employee monitoring is often automated using w:employee monitoring software

- A w:wordfilter (sometimes referred to as just "filter" or "censor") is a script typically used on w:Internet forums or w:chat rooms that automatically scans users' posts or comments as they are submitted and automatically changes or w:censors particular words or phrases. (Wikipedia as of 2020-09)

- w:Domain fronting is a technique for w:internet censorship circumvention that uses different w:domain names in different communication layers of an w:HTTPS connection to discreetly connect to a different target domain than is discernable to third parties monitoring the requests and connections. (Wikipedia 2020-09-22)

- w:Internet censorship in China and w:some tips on how to evade internet censorship in China
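As an illustration of the wordfilter entry in the list above, the following is a minimal sketch of a script that scans a submitted post and masks listed words. The function names and the word list are hypothetical, and real forum software typically handles misspellings and deliberate obfuscation, which this sketch does not.

```python
import re


def make_wordfilter(banned_words, mask="*"):
    """Build a function that masks whole-word, case-insensitive matches."""
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(word) for word in banned_words) + r")\b",
        re.IGNORECASE,
    )

    def censor(post):
        # Replace each matched word with mask characters of the same length
        return pattern.sub(lambda match: mask * len(match.group(0)), post)

    return censor


# Hypothetical word list and post
censor = make_wordfilter(["darn", "heck"])
censored = censor("Well, darn it. What the HECK?")
```

Matching on word boundaries avoids masking harmless words that merely contain a listed word as a substring.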

Sources for technologies

A map of technologies courtesy of Samsung Next, linked from 'Why it’s time to change the conversation around synthetic media' at venturebeat.com[1st seen in 5]

See also

Image 1: Separating specular and diffuse reflected light

(a) Normal image in dot lighting

(b) Image of the diffuse reflection, which is caught by placing a vertical polarizer in front of the light source and a horizontal one in front of the camera

(c) Image of the highlight specular reflection, which is caught by placing both polarizers vertically

(d) The difference of c and b yields the specular highlight component

Images are scaled to seem to be the same luminosity.

Original image by Debevec et al. – Copyright ACM 2000 – https://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

Biblical connection - Revelation 13 and Daniel 7: in Daniel 7 and Revelation 13 we are warned of this age of industrial filth. Revelation 19:20 says that the beast is taken prisoner. Can we achieve this without APW_AI?

'Saint John on Patmos' pictures w:John of Patmos on w:Patmos writing down the visions to make the w:Book of Revelation. 'Saint John on Patmos' is from folio 17 of the w:Très Riches Heures du Duc de Berry (1412-1416) by the w:Limbourg brothers, currently located at the w:Musée Condé, 40 km north of Paris, France.

References

- ↑ Debevec, Paul (2000). "Acquiring the reflectance field of a human face". Proceedings of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH '00. ACM. pp. 145–156. doi:10.1145/344779.344855. ISBN 978-1581132083. Retrieved 2017-05-24.

- ↑ https://www.crunchbase.com/organization/thatsmyface-com

- ↑ whois facepinpoint.com

- ↑ https://www.facepinpoint.com/aboutus

- ↑ "Microsoft tip led police to arrest man over child abuse images". w:The Guardian. 2014-08-07.

1st seen in

- ↑ 1.0 1.1 https://spectrum.ieee.org/deepfake-porn

- ↑ 2.0 2.1 2.2 2.3 Seen first in https://github.com/topics/porn-block, meta for actual use. The topic was stumbled upon.

- ↑ 3.0 3.1 Seen first in https://github.com/topics/pornblocker Saw this originally when looking at https://github.com/topics/porn-block Topic

- ↑ 4.0 4.1 4.2 Seen first in https://github.com/topics/porn-filter Saw this originally when looking at https://github.com/topics/porn-block Topic

- ↑ venturebeat.com found via some Facebook AI & ML group or page yesterday. Sorry, don't know precisely right now.

Digital sound-alikes

Living people can defend[footnote 2] themselves against a digital sound-alike by denying the things the digital sound-alike says, if these are presented to the target, but dead people cannot. Digital sound-alikes offer criminals new disinformation attack vectors and wreak havoc on provability.

Timeline of digital sound-alikes

- In 2016 w:Adobe Inc.'s Voco, an unreleased prototype, was publicly demonstrated. (View and listen to Adobe MAX 2016 presentation of Voco)

- In 2016 w:WaveNet by w:DeepMind, which is owned by w:Google, also demonstrated the ability to steal people's voices.

- At the 2018 Conference on Neural Information Processing Systems the work 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' (at arXiv.org) was presented. The pre-trained model is able to steal voices from a sample of only 5 seconds with almost convincing results.

- As of 2019 Symantec research knows of 3 cases where digital sound-alike technology has been used for crimes.[1]

'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' 2018 by Google Research (external transclusion)

The Iframe below is transcluded from 'Audio samples from "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis"' at google.github.io, the audio samples of a sound-like-anyone machine presented at the 2018 w:NeurIPS conference by Google researchers.

Documented digital sound-alike attacks

- 'An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft', a 2019 Washington Post article

Possible legal response: Outlawing digital sound-alikes (transcluded)

Transcluded from Juho's proposal on banning digital sound-alikes

Example of a hypothetical 4-victim digital sound-alike attack

A very simple example of a digital sound-alike attack is as follows:

Someone puts a digital sound-alike to call somebody's voicemail from an unknown number and to speak for example illegal threats. In this example there are at least two victims:

- Victim #1 - The person whose voice has been stolen into a covert model and a digital sound-alike made from it to frame them for crimes

- Victim #2 - The person to whom the illegal threat is presented in a recorded form by a digital sound-alike that deceptively sounds like victim #1

- Victim #3 - It could also be viewed that victim #3 is our law enforcement systems as they are put to chase after and interrogate the innocent victim #1

- Victim #4 - Our judiciary which prosecutes and possibly convicts the innocent victim #1.

Thus it is high time to act and to criminalize the covert modeling of human appearance and voice!

Examples of speech synthesis software not quite able to fool a human yet

Some other contenders for creating digital sound-alikes exist, though as of 2019 their speech synthesis in most use scenarios does not yet fool a human, because the results contain telltale signs that give them away as the output of a speech synthesizer.

- Lyrebird.ai (listen)

- CandyVoice.com (test with your choice of text)

- Merlin, a w:neural network based speech synthesis system by the Centre for Speech Technology Research at the w:University of Edinburgh

The below video 'This AI Clones Your Voice After Listening for 5 Seconds' by '2 minute papers' describes the voice thieving machine presented by Google Research in NeurIPS 2018.

Media perhaps about synthetic human-like fakes

This is a chronological listing of media that are probably to do with synthetic human-like fakes.

6th century BC

Daniel 7, Daniel's vision of the three beasts (Dan 7:1-6) and the fourth beast (Dan 7:7-8) from the sea, and the Ancient of Days (Dan 7:9-10)

- w:6th century BC | scripture | w:Daniel (biblical figure) was in w:Babylonian captivity when he had his visions where God warned us of synthetic human-like fakes first.

- His testimony was put into written form in the 3rd century BC.

3rd century BC

- w:3rd century BC | scripture | The w:Book of Daniel was put in writing.

- See Biblical explanation - The books of Daniel and Revelations § Daniel 7. Caution to reader: contains explicit written information about the beasts.

1st century

- w:1st century | scripture | w:Jesus teaches about things that are yet to come in w:Matthew 24, w:The Sheep and the Goats and w:Mark 13.

- 1st century | scripture | w:2 Thessalonians 2 is the second chapter of the w:Second Epistle to the Thessalonians. It is traditionally attributed to w:Paul the Apostle, with w:Saint Timothy as a co-author. See Biblical explanation - The books of Daniel and Revelations § 2 Thessalonians 2. Caution to reader: contains explicit written information about the beasts.

Footnotes

- ↑ It is terminologically more precise, more inclusive and more useful to talk about 'synthetic terror porn' than 'synthetic rape porn', if we want to call things by their real names, because synthesizing recordings of consensual-looking sex scenes can also be terroristic in intent.

- ↑ Whether a suspect can defend against faked synthetic speech that sounds like him/her depends on how up-to-date the judiciary is. If no information and instructions about digital sound-alikes have been given to the judiciary, they likely will not believe the defense of denying that the recording is of the suspect's voice.
