Synthetic human-like fakes: Difference between revisions

Juho Kunsola (talk | contribs) |

Juho Kunsola (talk | contribs) m (language) |


| (118 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

'''Definitions''' | |||

<section begin=definitions-of-synthetic-human-like-fakes /> | <section begin=definitions-of-synthetic-human-like-fakes /> | ||

When the '''[[Glossary#No camera|camera does not exist]]''', but the subject being imaged with a simulation of a (movie) camera deceives the watcher into believing it is some living or dead person, it is a '''[[#Digital look-alikes|digital look-alike]]'''. | When the '''[[Glossary#No camera|camera does not exist]]''', but the subject being imaged with a simulation of a (movie) camera deceives the watcher into believing it is some living or dead person, it is a '''[[Synthetic human-like fakes#Digital look-alikes|digital look-alike]]'''. | ||

When it cannot be determined by human testing or media forensics whether some fake voice is a synthetic fake of some person's voice or an actual recording of that person's real voice, it is a pre-recorded '''[[#Digital sound-alikes|digital sound-alike]]'''. | In 2017-2018 this started to be referred to as [[w:deepfake]], even though altering video footage of humans with a computer to deceptive effect is actually 20 years older than the name "deep fakes" or "deepfakes".<ref name="Bohacek and Farid 2022 protecting against fakes"> | ||

{{cite journal | |||

| last1 = Boháček | |||

| first1 = Matyáš | |||

| last2 = Farid | |||

| first2 = Hany | |||

| date = 2022-11-23 | |||

| title = Protecting world leaders against deep fakes using facial, gestural, and vocal mannerisms | |||

| url = https://www.pnas.org/doi/10.1073/pnas.2216035119 | |||

| journal = [[w:Proceedings of the National Academy of Sciences of the United States of America]] | |||

| volume = 119 | |||

| issue = 48 | |||

| pages = | |||

| doi = 10.1073/pnas.2216035119 | |||

| access-date = 2023-01-05 | |||

}} | |||

</ref><ref name="Bregler1997"> | |||

{{cite journal | |||

| last1 = Bregler | |||

| first1 = Christoph | |||

| last2 = Covell | |||

| first2 = Michele | |||

| last3 = Slaney | |||

| first3 = Malcolm | |||

| date = 1997-08-03 | |||

| title = Video Rewrite: Driving Visual Speech with Audio | |||

| url = https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/human/bregler-sig97.pdf | |||

| journal = SIGGRAPH '97: Proceedings of the 24th annual conference on Computer graphics and interactive techniques | |||

| volume = | |||

| issue = | |||

| pages = 353-360 | |||

| doi = 10.1145/258734.258880 | |||

| access-date = 2022-09-09 | |||

}} | |||

</ref> | |||

When it cannot be determined by human testing or media forensics whether some fake voice is a synthetic fake of some person's voice or an actual recording of that person's real voice, it is a pre-recorded '''[[Synthetic human-like fakes#Digital sound-alikes|digital sound-alike]]'''. This is now commonly referred to as [[w:audio deepfake]]. | |||

A '''real-time digital look-and-sound-alike''' in a video call was used to defraud a substantial amount of money in 2023.<ref name="Reuters real-time digital look-and-sound-alike crime 2023"> | |||

{{cite web | |||

| url = https://www.reuters.com/technology/deepfake-scam-china-fans-worries-over-ai-driven-fraud-2023-05-22/ | |||

| title = 'Deepfake' scam in China fans worries over AI-driven fraud | |||

| last = | |||

| first = | |||

| date = 2023-05-22 | |||

| website = [[w:Reuters.com]] | |||

| publisher = [[w:Reuters]] | |||

| access-date = 2023-06-05 | |||

| quote = | |||

}} | |||

</ref> | |||

<section end=definitions-of-synthetic-human-like-fakes /> | <section end=definitions-of-synthetic-human-like-fakes /> | ||

::[[Synthetic human-like fakes|Read more about '''synthetic human-like fakes''']], see and support '''[[organizations and events against synthetic human-like fakes]]''' and what they are doing, what kinds of '''[[Laws against synthesis and other related crimes]]''' have been formulated, [[Synthetic human-like fakes#Timeline of synthetic human-like fakes|examine the SSFWIKI '''timeline''' of synthetic human-like fakes]] or [[Mediatheque|view the '''Mediatheque''']]. | |||

[[File:Screenshot at 27s of a moving digital-look-alike made to appear Obama-like by Monkeypaw Productions and Buzzfeed 2018.png|thumb|right|480px|link=Mediatheque/2018/Obama's appearance thieved - a public service announcement digital look-alike by Monkeypaw Productions and Buzzfeed|{{#lst:Mediatheque|Obama-like-fake-2018}}]] | [[File:Screenshot at 27s of a moving digital-look-alike made to appear Obama-like by Monkeypaw Productions and Buzzfeed 2018.png|thumb|right|480px|link=Mediatheque/2018/Obama's appearance thieved - a public service announcement digital look-alike by Monkeypaw Productions and Buzzfeed|{{#lst:Mediatheque|Obama-like-fake-2018}}]] | ||

| Line 44: | Line 101: | ||

<small>[[:File:Deb-2000-reflectance-separation.png|Original picture]] by [[w:Paul Debevec]] et al. - Copyright ACM 2000 https://dl.acm.org/citation.cfm?doid=311779.344855</small>]] | <small>[[:File:Deb-2000-reflectance-separation.png|Original picture]] by [[w:Paul Debevec]] et al. - Copyright ACM 2000 https://dl.acm.org/citation.cfm?doid=311779.344855</small>]] | ||

In the cinemas we have seen digital look-alikes for over | In the cinemas we have seen digital look-alikes for over 20 years. These digital look-alikes have "clothing" (a simulation of clothing is not clothing) or "superhero costumes" and "superbaddie costumes", and they don't need to care about the laws of physics, let alone the laws of physiology. It is generally accepted that digital look-alikes made their public debut in the sequels of The Matrix, i.e. [[w:The Matrix Reloaded]] and [[w:The Matrix Revolutions]], released in 2003. It can be considered almost certain that it was not possible to make these before the year 1999, as the final piece of the puzzle to make a (still) digital look-alike that passes human testing, the [[Glossary#Reflectance capture|reflectance capture]] over the human face, was made for the first time in 1999 at the [[w:University of Southern California]] and was presented to the crème de la crème | ||

of the computer graphics field in their annual gathering SIGGRAPH 2000.<ref name="Deb2000"> | of the computer graphics field in their annual gathering SIGGRAPH 2000.<ref name="Deb2000"> | ||

{{cite book | {{cite book | ||

| Line 67: | Line 124: | ||

=== The problems with digital look-alikes === | === The problems with digital look-alikes === | ||

Extremely unfortunately for humankind, organized criminal leagues that possess the '''weapons capability''' of making believable-looking '''synthetic pornography''' are producing, on industrial production pipelines, '''synthetic | Extremely unfortunately for humankind, organized criminal leagues that possess the '''weapons capability''' of making believable-looking '''synthetic pornography''' are producing, on industrial production pipelines, '''terroristic synthetic pornography'''<ref group="footnote" name="About the term terroristic synthetic pornography">It is terminologically more precise, more inclusive and more useful to talk about 'terroristic synthetic pornography', if we want to talk about things with their real names, than 'synthetic rape porn', because synthesizing recordings of consensual-looking sex scenes can also be terroristic in intent.</ref> by animating digital look-alikes and distributing it on the murky Internet in exchange for money stacks that are getting thinner and thinner as time goes by. | ||

These industrially produced pornographic delusions are causing great human suffering, especially in their direct victims, but they are also tearing our communities and societies apart, sowing blind rage, perceptions of deepening chaos and feelings of powerlessness, and provoking violence. | |||

These kinds of '''hate illustration''' increase and strengthen hate feeling, hate thinking, hate speech and hate crimes, tear our fragile social constructions apart and, with time, pervert humankind's view of humankind into an almost unrecognizable shape, unless we interfere with resolve. | |||

'''Children-like sexual abuse images''' | |||

Sadly, by 2023 there is a market for synthetic human-like sexual abuse material that looks like children. See [https://www.bbc.com/news/uk-65932372 ''''''Illegal trade in AI child sex abuse images exposed'''''' at bbc.com], 2023-06-28 reporting on [[w:Stable Diffusion]] being abused to produce these kinds of images. The [[w:Internet Watch Foundation]] also reports on the alarming production of synthetic human-like sex abuse material portraying minors. See [https://www.iwf.org.uk/news-media/news/prime-minister-must-act-on-threat-of-ai-as-iwf-sounds-alarm-on-first-confirmed-ai-generated-images-of-child-sexual-abuse/ ''''''Prime Minister must act on threat of AI as IWF ‘sounds alarm’ on first confirmed AI-generated images of child sexual abuse'''''' at iwf.org.uk] (2023-08-18). | |||

=== Fixing the problems from digital look-alikes === | |||

We need to move on 3 fields: [[Laws against synthesis and other related crimes|legal]], technological and cultural. | |||

'''Technological''': [[FacePinPoint.com]], a computer vision system for seeking unauthorized pornography / nudes, existed 2017-2021 and could be revived if funding is found. It was a service practically identical to the SSFWIKI original concept [[Adequate Porn Watcher AI (concept)]]. | |||

'''Legal''': Legislators around the planet have been waking up to the reality that not everything that seems to be a video of people is a video of people, and various laws have been passed to protect humans and humanity from the menaces of synthetic human-like fakes, mostly digital look-alikes so far, but hopefully humans will also be protected from other aspects of synthetic human-like fakes by laws. See [[Laws against synthesis and other related crimes]]. | |||

=== Age analysis and rejuvenating and aging syntheses === | === Age analysis and rejuvenating and aging syntheses === | ||

| Line 109: | Line 172: | ||

== Digital sound-alikes == | == Digital sound-alikes == | ||

=== University of Florida published an antidote to synthetic human-like fake voices in 2022 === | |||

'''2022''' saw a brilliant '''<font color="green">counter-measure</font>''' presented to peers at the 31st [[w:USENIX]] Security Symposium, 10-12 August 2022, by the [[w:University of Florida]]: <u><big>'''[[Detecting deep-fake audio through vocal tract reconstruction]]'''</big></u>. | |||

The university's foundation has applied for a patent, and let us hope that they will [[w:copyleft]] the patent, as this protective method needs to be rolled out to protect humanity. | |||

'''Below transcluded [[Detecting deep-fake audio through vocal tract reconstruction|from the article]]''' | |||

{{#lst:Detecting deep-fake audio through vocal tract reconstruction|what-is-it}} {{#lst:Detecting deep-fake audio through vocal tract reconstruction|original-reporting}} | |||

'''This new counter-measure needs to be rolled out to humans to protect humans against the fake human-like voices.''' | |||

{{#lst:Detecting deep-fake audio through vocal tract reconstruction|embed}} | |||

=== On known history of digital sound-alikes === | |||

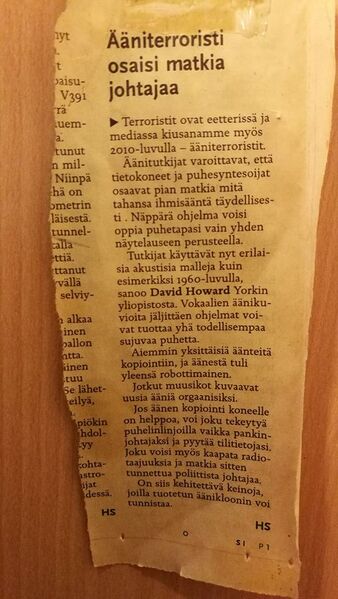

[[File:Helsingin-Sanomat-2012-David-Martin-Howard-of-University-of-York-on-apporaching-digital-sound-alikes.jpg|right|thumb|338px|A picture of a cut-away titled "''Voice-terrorist could mimic a leader''" from a 2012 [[w:Helsingin Sanomat]] warning that the sound-like-anyone machines are approaching. Thank you to homie [https://pure.york.ac.uk/portal/en/researchers/david-martin-howard(ecfa9e9e-1290-464f-981a-0c70a534609e).html Prof. David Martin Howard] of the [[w:University of York]], UK and the anonymous editor for the heads-up.]] | [[File:Helsingin-Sanomat-2012-David-Martin-Howard-of-University-of-York-on-apporaching-digital-sound-alikes.jpg|right|thumb|338px|A picture of a cut-away titled "''Voice-terrorist could mimic a leader''" from a 2012 [[w:Helsingin Sanomat]] warning that the sound-like-anyone machines are approaching. Thank you to homie [https://pure.york.ac.uk/portal/en/researchers/david-martin-howard(ecfa9e9e-1290-464f-981a-0c70a534609e).html Prof. David Martin Howard] of the [[w:University of York]], UK and the anonymous editor for the heads-up.]] | ||

| Line 116: | Line 193: | ||

Then in '''2018''' at the '''[[w:Conference on Neural Information Processing Systems]]''' (NeurIPS) the work [http://papers.nips.cc/paper/7700-transfer-learning-from-speaker-verification-to-multispeaker-text-to-speech-synthesis 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis'] ([https://arxiv.org/abs/1806.04558 at arXiv.org]) was presented. The pre-trained model is able to steal voices from a sample of only '''5 seconds''' with almost convincing results. | Then in '''2018''' at the '''[[w:Conference on Neural Information Processing Systems]]''' (NeurIPS) the work [http://papers.nips.cc/paper/7700-transfer-learning-from-speaker-verification-to-multispeaker-text-to-speech-synthesis 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis'] ([https://arxiv.org/abs/1806.04558 at arXiv.org]) was presented. The pre-trained model is able to steal voices from a sample of only '''5 seconds''' with almost convincing results. | ||

The Iframe below is transcluded from [https://google.github.io/tacotron/publications/speaker_adaptation/ 'Audio samples from "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis"' at google.github.io], the audio samples of a sound-like-anyone machine presented at the 2018 [[w:NeurIPS]] conference by Google researchers. | The Iframe below is transcluded from [https://google.github.io/tacotron/publications/speaker_adaptation/ ''''''Audio samples from "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis"'''''' at google.github.io], the audio samples of a sound-like-anyone machine presented at the 2018 [[w:NeurIPS]] conference by Google researchers. | ||

Have a listen. | |||

{{#Widget:Iframe - Audio samples from Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis by Google Research}} | {{#Widget:Iframe - Audio samples from Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis by Google Research}} | ||

| Line 123: | Line 202: | ||

<section end=GoogleTransferLearning2018 /> | <section end=GoogleTransferLearning2018 /> | ||

''' Reporting on the sound-like-anyone-machines ''' | |||

* [https://www.forbes.com/sites/bernardmarr/2019/05/06/artificial-intelligence-can-now-copy-your-voice-what-does-that-mean-for-humans/#617f6d872a2a '''"Artificial Intelligence Can Now Copy Your Voice: What Does That Mean For Humans?"''' May 2019 reporting at forbes.com] on [[w:Baidu Research]]'s attempt at the sound-like-anyone-machine demonstrated at the 2018 [[w:NeurIPS]] conference. | |||

The [https://www.youtube.com/watch?v=0sR1rU3gLzQ video 'This AI Clones Your Voice After Listening for 5 Seconds' by '2 minute papers' at YouTube] to the right describes the voice thieving machine presented by Google Research at [[w:NeurIPS|w:NeurIPS]] 2018. | The [https://www.youtube.com/watch?v=0sR1rU3gLzQ video 'This AI Clones Your Voice After Listening for 5 Seconds' by '2 minute papers' at YouTube] to the right describes the voice thieving machine presented by Google Research at [[w:NeurIPS|w:NeurIPS]] 2018. | ||

| Line 132: | Line 215: | ||

==== 2019 digital sound-alike enabled fraud ==== | ==== 2019 digital sound-alike enabled fraud ==== | ||

By 2019 digital sound-alike technology had found its way into the hands of criminals. In '''2019''' [[w:NortonLifeLock|Symantec]] researchers knew of 3 cases where digital sound-alike technology had been used for '''[[w:crime]]''' | By 2019 digital sound-alike technology had found its way into the hands of criminals. In '''2019''' [[w:NortonLifeLock|Symantec]] researchers knew of 3 cases where digital sound-alike technology had been used for '''[[w:crime]]'''.<ref name="Washington Post reporting on 2019 digital sound-alike fraud" /> | ||

Of these crimes the most publicized was a fraud case in March 2019 where 220,000€ were defrauded with the use of a real-time digital sound-alike.<ref name="WSJ original reporting on 2019 digital sound-alike fraud" /> The company that was the victim of this fraud had bought some kind of cyberscam insurance from French insurer [[w:Euler Hermes]] and the case came to light when Mr. Rüdiger Kirsch of Euler Hermes informed [[w:The Wall Street Journal]] about it.<ref name="Forbes reporting on 2019 digital sound-alike fraud" /> | Of these crimes the most publicized was a fraud case in March 2019 where 220,000€ were defrauded with the use of a real-time digital sound-alike.<ref name="WSJ original reporting on 2019 digital sound-alike fraud" /> The company that was the victim of this fraud had bought some kind of cyberscam insurance from French insurer [[w:Euler Hermes]] and the case came to light when Mr. Rüdiger Kirsch of Euler Hermes informed [[w:The Wall Street Journal]] about it.<ref name="Forbes reporting on 2019 digital sound-alike fraud" /> | ||

''' Reporting on the 2019 digital sound-alike enabled fraud ''' | |||

* [https://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402 '''''Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case''''' at wsj.com] original reporting, date unknown, updated 2019-08-30<ref name="WSJ original reporting on 2019 digital sound-alike fraud"> | * [https://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402 '''''Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case''''' at wsj.com] original reporting, date unknown, updated 2019-08-30<ref name="WSJ original reporting on 2019 digital sound-alike fraud"> | ||

| Line 173: | Line 257: | ||

|publisher= [[w:Washington Post]] | |publisher= [[w:Washington Post]] | ||

|access-date= 2019-07-22 | |access-date= 2019-07-22 | ||

|quote= }} | |quote=Researchers at the cybersecurity firm Symantec said they have found at least three cases of executives’ voices being mimicked to swindle companies. Symantec declined to name the victim companies or say whether the Euler Hermes case was one of them, but it noted that the losses in one of the cases totaled millions of dollars.}} | ||

</ref> | </ref> | ||

* [https://www.forbes.com/sites/jessedamiani/2019/09/03/a-voice-deepfake-was-used-to-scam-a-ceo-out-of-243000/ '''''A Voice Deepfake Was Used To Scam A CEO Out Of $243,000''''' at forbes.com], 2019-09-03 reporting<ref name="Forbes reporting on 2019 digital sound-alike fraud"> | * [https://www.forbes.com/sites/jessedamiani/2019/09/03/a-voice-deepfake-was-used-to-scam-a-ceo-out-of-243000/ '''''A Voice Deepfake Was Used To Scam A CEO Out Of $243,000''''' at forbes.com], 2019-09-03 reporting<ref name="Forbes reporting on 2019 digital sound-alike fraud"> | ||

| Line 187: | Line 271: | ||

|access-date=2022-01-01 | |access-date=2022-01-01 | ||

|quote=According to a new report in The Wall Street Journal, the CEO of an unnamed UK-based energy firm believed he was on the phone with his boss, the chief executive of firm’s the German parent company, when he followed the orders to immediately transfer €220,000 (approx. $243,000) to the bank account of a Hungarian supplier. In fact, the voice belonged to a fraudster using AI voice technology to spoof the German chief executive. Rüdiger Kirsch of Euler Hermes Group SA, the firm’s insurance company, shared the information with WSJ.}} | |quote=According to a new report in The Wall Street Journal, the CEO of an unnamed UK-based energy firm believed he was on the phone with his boss, the chief executive of firm’s the German parent company, when he followed the orders to immediately transfer €220,000 (approx. $243,000) to the bank account of a Hungarian supplier. In fact, the voice belonged to a fraudster using AI voice technology to spoof the German chief executive. Rüdiger Kirsch of Euler Hermes Group SA, the firm’s insurance company, shared the information with WSJ.}} | ||

</ref> | |||

==== 2020 digital sound-alike fraud attempt ==== | |||

In June 2020 a fraud was attempted with a poor-quality pre-recorded digital sound-alike delivered by voicemail. ([https://soundcloud.com/jason-koebler/redacted-clip '''Listen to a redacted clip''' at soundcloud.com]) The recipient in a tech company didn't believe the voicemail to be real and alerted the company, which realized that someone had tried to scam them. The company called in Nisos to investigate the issue. Nisos analyzed the evidence and was certain it was a fake, but found that it had aspects of a cut-and-paste job to it. Nisos prepared [https://www.nisos.com/blog/synthetic-audio-deepfake/ a report titled '''''"The Rise of Synthetic Audio Deepfakes"''''' at nisos.com] on the issue and shared it with Motherboard, part of [[w:Vice (magazine)]], prior to its release.<ref name="Vice reporting on 2020 digital sound-alike fraud attempt"> | |||

{{cite web | |||

|url=https://www.vice.com/en/article/pkyqvb/deepfake-audio-impersonating-ceo-fraud-attempt | |||

|title=Listen to This Deepfake Audio Impersonating a CEO in Brazen Fraud Attempt | |||

|last=Franceschi-Bicchierai | |||

|first=Lorenzo | |||

|date=2020-07-23 | |||

|website=[[w:Vice.com]] | |||

|publisher=[[w:Vice (magazine)]] | |||

|access-date=2022-01-03 | |||

|quote=}} | |||

</ref> | </ref> | ||

==== 2021 digital sound-alike enabled fraud ==== | ==== 2021 digital sound-alike enabled fraud ==== | ||

=== | <section begin=2021 digital sound-alike enabled fraud />The 2nd publicly known fraud done with a digital sound-alike<ref group="1st seen in" name="2021 digital sound-alike fraud case">https://www.reddit.com/r/VocalSynthesis/</ref> took place on Friday 2021-01-15. A bank in Hong Kong was manipulated into wiring money to numerous bank accounts by the use of a voice stolen from one of their client company's directors. The fraudsters managed to defraud $35 million of the U.A.E. based company's money.<ref name="Forbes reporting on 2021 digital sound-alike fraud">https://www.forbes.com/sites/thomasbrewster/2021/10/14/huge-bank-fraud-uses-deep-fake-voice-tech-to-steal-millions/</ref> This case came to light when Forbes saw [https://www.documentcloud.org/documents/21085009-hackers-use-deep-voice-tech-in-400k-theft a document] where the U.A.E. financial authorities were seeking administrative assistance from the US authorities towards recovering a small portion of the defrauded money that had been sent to bank accounts in the USA.<ref name="Forbes reporting on 2021 digital sound-alike fraud" /> | ||

'''Reporting on the 2021 digital sound-alike enabled fraud''' | |||

* [https://www.forbes.com/sites/thomasbrewster/2021/10/14/huge-bank-fraud-uses-deep-fake-voice-tech-to-steal-millions/ '''''Fraudsters Cloned Company Director’s Voice In $35 Million Bank Heist, Police Find''''' at forbes.com] 2021-10-14 original reporting | |||

* [https://www.unite.ai/deepfaked-voice-enabled-35-million-bank-heist-in-2020/ '''''Deepfaked Voice Enabled $35 Million Bank Heist in 2020''''' at unite.ai]<ref group="1st seen in" name="2021 digital sound-alike fraud case" /> reporting updated on 2021-10-15 | |||

* [https://www.aiaaic.org/aiaaic-repository/ai-and-algorithmic-incidents-and-controversies/usd-35m-voice-cloning-heist '''''USD 35m voice cloning heist''''' at aiaaic.org], October 2021 AIAAIC repository entry | |||

<section end=2021 digital sound-alike enabled fraud /> | |||

'''More fraud cases with digital sound-alikes''' | |||

* [https://www.washingtonpost.com/technology/2023/03/05/ai-voice-scam/ '''''They thought loved ones were calling for help. It was an AI scam.''''' at washingtonpost.com], March 2023 reporting | |||

=== Example of a hypothetical 4-victim digital sound-alike attack === | === Example of a hypothetical 4-victim digital sound-alike attack === | ||

| Line 212: | Line 314: | ||

# Victim #3 - It could also be viewed that victim #3 is our law enforcement systems as they are put to chase after and interrogate the innocent victim #1 | # Victim #3 - It could also be viewed that victim #3 is our law enforcement systems as they are put to chase after and interrogate the innocent victim #1 | ||

# Victim #4 - Our judiciary which prosecutes and possibly convicts the innocent victim #1. | # Victim #4 - Our judiciary which prosecutes and possibly convicts the innocent victim #1. | ||

=== Examples of speech synthesis software not quite able to fool a human yet === | === Examples of speech synthesis software not quite able to fool a human yet === | ||

| Line 222: | Line 322: | ||

* '''[https://cstr-edinburgh.github.io/merlin/ Merlin]''', a [[w:neural network]] based speech synthesis system by the Centre for Speech Technology Research at the [[w:University of Edinburgh]] | * '''[https://cstr-edinburgh.github.io/merlin/ Merlin]''', a [[w:neural network]] based speech synthesis system by the Centre for Speech Technology Research at the [[w:University of Edinburgh]] | ||

* [https://papers.nips.cc/paper/8206-neural-voice-cloning-with-a-few-samples ''''Neural Voice Cloning with a Few Samples'''' at papers.nips.cc], [[w:Baidu Research]]'s shot at a sound-like-anyone-machine did not convince in '''2018''' | * [https://papers.nips.cc/paper/8206-neural-voice-cloning-with-a-few-samples ''''Neural Voice Cloning with a Few Samples'''' at papers.nips.cc], [[w:Baidu Research]]'s shot at a sound-like-anyone-machine did not convince in '''2018''' | ||

=== Temporal limit of digital sound-alikes === | === Temporal limit of digital sound-alikes === | ||

| Line 249: | Line 346: | ||

[[File:Spectrogram-19thC.png|thumb|right|640px|A [[w:spectrogram]] of a male voice saying 'nineteenth century']] | [[File:Spectrogram-19thC.png|thumb|right|640px|A [[w:spectrogram]] of a male voice saying 'nineteenth century']] | ||

== | === What should we do about digital sound-alikes? === | ||

Living people can defend<ref group="footnote" name="judiciary maybe not aware">Whether a suspect can defend against faked synthetic speech that sounds like him/her depends on how up-to-date the judiciary is. If no information and instructions about digital sound-alikes have been given to the judiciary, they likely will not believe the defense of denying that the recording is of the suspect's voice.</ref> themselves against a digital sound-alike by denying the things the digital sound-alike says, if these are presented to the target, but dead people cannot. Digital sound-alikes offer criminals new disinformation attack vectors and wreak havoc on provability. | |||

For these reasons the bannable '''raw materials''' i.e. covert voice models '''[[Law proposals to ban covert modeling|should be prohibited by law]]''' in order to protect humans from abuse by criminal parties. | |||

It is high time to act and to '''[[Law proposals to ban covert modeling|criminalize the covert modeling of human voice!]]''' | |||

== Text syntheses == | == Text syntheses == | ||

[[w:Chatbot]]s have existed for a long time, but only now, armed with AI, are they becoming more deceptive. | [[w:Chatbot]]s and [[w:spamming]] have existed for a long time, but only now, armed with AI, are they becoming more deceptive. | ||

In [[w:natural language processing]], development in [[w:natural-language understanding]] leads to more cunning [[w:natural-language generation]] AI. | In [[w:natural language processing]], development in [[w:natural-language understanding]] leads to more cunning [[w:natural-language generation]] AI. | ||

'''[[w:Large language model]]s''' ('''LLM''') are very large [[w:language model]]s consisting of a [[w:Artificial neural network|w:neural network]] with many parameters. | |||

[[w:OpenAI]]'s [[w:OpenAI#GPT|w:Generative Pre-trained Transformer]] ('''GPT''') is a left-to-right [[w:transformer (machine learning model)]]-based [[w:Natural-language generation|text generation]] model succeeded by [[w:OpenAI#GPT-2|w:GPT-2]] and [[w:OpenAI#GPT-3|w:GPT-3]]. | [[w:OpenAI]]'s [[w:OpenAI#GPT|w:Generative Pre-trained Transformer]] ('''GPT''') is a left-to-right [[w:transformer (machine learning model)]]-based [[w:Natural-language generation|text generation]] model succeeded by [[w:OpenAI#GPT-2|w:GPT-2]] and [[w:OpenAI#GPT-3|w:GPT-3]]. | ||

November 2022 saw the publication of OpenAI's '''[[w:ChatGPT]]''', a conversational artificial intelligence. | |||

'''[[w:Bard (chatbot)]]''' is a conversational [[w:generative artificial intelligence]] [[w:chatbot]] developed by [[w:Google]], based on the [[w:LaMDA]] family of [[w:large language models]]. It was developed as a direct response to the rise of [[w:OpenAI]]'s [[w:ChatGPT]], and was released in March 2023. ([https://en.wikipedia.org/w/index.php?title=Bard_(chatbot)&oldid=1152361586 Wikipedia]) | |||

''' Reporting / announcements ''' | ''' Reporting / announcements ''' (in reverse chronology) | ||

* [https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web/ '''''Reinventing search with a new AI-powered Microsoft Bing and Edge, your copilot for the web''''' at blogs.microsoft.com] '''February 2023''' (2023-02-07). The new improved Bing, available only in Microsoft's Edge browser is reportedly based on a language model refined from GPT 3.5.<ref>https://www.theverge.com/2023/2/7/23587454/microsoft-bing-edge-chatgpt-ai</ref> | |||

* [https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text '''New AI classifier for indicating AI-written text''' at openai.com], a '''January 2023''' blog post about OpenAI's AI classifier for detecting AI-written texts. | |||

* [https://openai.com/blog/chatgpt '''''Introducing ChatGPT''''' at openai.com] '''November 2022''' (2022-11-30) | |||

* [https://www.technologyreview.com/2020/08/14/1006780/ai-gpt-3-fake-blog-reached-top-of-hacker-news/ ''''A college kid’s fake, AI-generated blog fooled tens of thousands. This is how he made it.'''' at technologyreview.com] '''August 2020''' reporting in the [[w:MIT Technology Review]] by Karen Hao about GPT-3. | * [https://www.technologyreview.com/2020/08/14/1006780/ai-gpt-3-fake-blog-reached-top-of-hacker-news/ ''''A college kid’s fake, AI-generated blog fooled tens of thousands. This is how he made it.'''' at technologyreview.com] '''August 2020''' reporting in the [[w:MIT Technology Review]] by Karen Hao about GPT-3. | ||

| Line 281: | Line 384: | ||

''' External links ''' | ''' External links ''' | ||

* [https://analyticssteps.com/blogs/detection-fake-and-false-news-text-analysis-approaches-and-cnn-deep-learning-model '''"Detection of Fake and False News (Text Analysis): Approaches and CNN as Deep Learning Model"''' at analyticssteps.com], a 2019 summary written by Shubham Panth. | * [https://analyticssteps.com/blogs/detection-fake-and-false-news-text-analysis-approaches-and-cnn-deep-learning-model '''"Detection of Fake and False News (Text Analysis): Approaches and CNN as Deep Learning Model"''' at analyticssteps.com], a 2019 summary written by Shubham Panth. | ||

=== Detectors for synthesized texts === | |||

The introduction of [[w:ChatGPT]] by OpenAI brought the need for software to detect machine-generated texts. | |||

Try AI plagiarism detection for free | |||

* [https://contentdetector.ai/ '''AI Content Detector''' at contentdetector.ai] - ''AI Content Detector - Detect ChatGPT Plagiarism'' ('''try for free''') | |||

* [https://platform.openai.com/ai-text-classifier '''AI Text Classifier''' at platform.openai.com] - ''The AI Text Classifier is a fine-tuned GPT model that predicts how likely it is that a piece of text was generated by AI from a variety of sources, such as ChatGPT.'' ('''free account required''') | |||

* [https://gptradar.com/ '''GPT Radar''' at gptradar.com] - ''AI text detector app'' ('''try for free''')<ref group="1st seen in" name="Wordlift.io 2023">https://wordlift.io/blog/en/best-plagiarism-checkers-for-ai-generated-content/</ref> | |||

* [https://gptzero.me/ '''GPTZero''' at gptzero.me] - ''The World's #1 AI Detector with over 1 Million Users'' ('''try for free''') | |||

* [https://copyleaks.com/plagiarism-checker '''Plagiarism Checker''' at copyleaks.com] - ''Plagiarism Checker by Copyleaks'' ('''try for free''')<ref group="1st seen in" name="Wordlift.io 2023" /> | |||

* https://gowinston.ai/ - ''The most powerful AI content detection solution'' ('''free-tier available''')<ref group="1st seen in" name="Wordlift.io 2023" /> | |||

* [https://www.zerogpt.com/ '''ZeroGPT''' at zerogpt.com]<ref group="1st seen in" name="Wordlift.io 2023" /> - ''GPT-4 And ChatGPT detector by ZeroGPT: detect OpenAI text - ZeroGPT the most Advanced and Reliable Chat GPT and GPT-4 detector tool'' ('''try for free''') | |||

For-a-fee AI plagiarism detection tools | |||

* https://originality.ai/ - ''The Most Accurate AI Content Detector and Plagiarism Checker Built for Serious Content Publishers''<ref group="1st seen in" name="Wordlift.io 2023" /> | |||

* https://www.turnitin.com/ - ''Empower students to do their best, original work''<ref group="1st seen in" name="Wordlift.io 2023" /> | |||

== Handwriting syntheses == | == Handwriting syntheses == | ||

| Line 290: | Line 411: | ||

If the handwriting-like synthesis passes human and media forensics testing, it is a '''digital handwrite-alike'''. | If the handwriting-like synthesis passes human and media forensics testing, it is a '''digital handwrite-alike'''. | ||

Here we find a '''risk''' similar to that which | Here we find a possible '''risk''' similar to that which became a reality when the '''[[w:speaker recognition]] systems''' turned out to be instrumental in the development of '''[[#Digital sound-alikes|digital sound-alikes]]'''. After the knowledge needed to recognize a speaker was [[w:Transfer learning|w:transferred]] into a generative task in 2018 by Google researchers, we can no longer effectively determine for English speakers which recording is of human origin and which is of machine origin. | ||

'''Handwriting-like syntheses''': | '''Handwriting-like syntheses''': | ||

| Line 326: | Line 447: | ||

* [https://github.com/topics/handwriting-recognition GitHub topic '''handwriting-recognition'''] contains 238 repositories as of September 2021. | * [https://github.com/topics/handwriting-recognition GitHub topic '''handwriting-recognition'''] contains 238 repositories as of September 2021. | ||

== | == Singing syntheses == | ||

As of 2020 the '''digital sing-alikes''' may not yet be here, but when we hear a faked singing voice and we cannot hear that it is fake, then we will know. An ability to sing does not seem to add many hostile capabilities compared to the ability to thieve the spoken word. | |||

* [https://arxiv.org/abs/1910.11690 ''''''Fast and High-Quality Singing Voice Synthesis System based on Convolutional Neural Networks'''''' at arxiv.org], a 2019 singing voice synthesis technique using [[w:convolutional neural network|w:convolutional neural networks (CNN)]]. Accepted into the 2020 [[w:International Conference on Acoustics, Speech, and Signal Processing|International Conference on Acoustics, Speech, and Signal Processing (ICASSP)]]. | |||

* [http://compmus.ime.usp.br/sbcm/2019/papers/sbcm-2019-7.pdf ''''''State of art of real-time singing voice synthesis'''''' at compmus.ime.usp.br] presented at the 2019 [http://compmus.ime.usp.br/sbcm/2019/program/ 17th Brazilian Symposium on Computer Music] | |||

* [http://theses.fr/2017PA066511 ''''''Synthesis and expressive transformation of singing voice'''''' at theses.fr] [https://www.theses.fr/2017PA066511.pdf as .pdf] a 2017 doctorate thesis by [http://theses.fr/227185943 Luc Ardaillon] | |||

* [http://mtg.upf.edu/node/512 ''''''Synthesis of the Singing Voice by Performance Sampling and Spectral Models'''''' at mtg.upf.edu], a 2007 journal article in the [[w:IEEE Signal Processing Society]]'s Signal Processing Magazine | |||

* [https://www.researchgate.net/publication/4295714_Speech-to-Singing_Synthesis_Converting_Speaking_Voices_to_Singing_Voices_by_Controlling_Acoustic_Features_Unique_to_Singing_Voices ''''''Speech-to-Singing Synthesis: Converting Speaking Voices to Singing Voices by Controlling Acoustic Features Unique to Singing Voices'''''' at researchgate.net], a November 2007 paper published in the IEEE conference on Applications of Signal Processing to Audio and Acoustics | |||

* | * [[w:Category:Singing software synthesizers]] | ||

---- | |||

= Timeline of synthetic human-like fakes = | |||

See the #SSFWIKI '''[[Mediatheque]]''' to view media that is, or probably is, related to synthetic human-like fakes. | |||

== 2020's synthetic human-like fakes == | |||

* '''2023''' | '''<font color="orange">Real-time digital look-and-sound-alike crime</font>''' | In April a man in northern China was defrauded of 4.3 million yuan by a criminal employing a digital look-and-sound-alike pretending to be his friend on a video call made with a stolen messaging service account.<ref name="Reuters real-time digital look-and-sound-alike crime 2023"/> | |||

* | * '''2023''' | '''<font color="orange">Election meddling with digital look-alikes</font>''' | The [[w:2023 Turkish presidential election]] saw numerous deepfake controversies. | ||

** "''Ahead of the election in Turkey, President Recep Tayyip Erdogan showed a video linking his main challenger Kemal Kilicdaroglu to the militant Kurdish organization PKK.''" [...] "''Research by DW's fact-checking team in cooperation with DW's Turkish service shows that the video at the campaign rally was '''manipulated''' by '''combining two separate videos''' with totally different backgrounds and content.''" [https://www.dw.com/en/fact-check-turkeys-erdogan-shows-false-kilicdaroglu-video/a-65554034 reports dw.com] | |||

* '''2023''' | March 7th | '''<font color="red">science / demonstration</font>''' | Microsoft researchers submitted a paper for publication outlining their [https://arxiv.org/abs/2303.03926 '''Cross-lingual neural codec language modeling system''' at arxiv.org] dubbed [https://www.microsoft.com/en-us/research/project/vall-e-x/vall-e-x/ '''VALL-E X''' at microsoft.com], which extends VALL-E's capabilities to be cross-lingual while maintaining the same "''emotional tone''" from sample to fake. | |||

</ | |||

* '''2023''' | January 5th | '''<font color="red">science / demonstration</font>''' | Microsoft researchers announced [https://www.microsoft.com/en-us/research/project/vall-e/ '''''VALL-E''''' - Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers (at microsoft.com)], which is able to thieve a voice from only '''3 seconds of sample''' and is also able to mimic the "''emotional tone''" of the sample from which the synthesis is produced.<ref> | |||

{{cite web | {{cite web | ||

| url = https://arstechnica.com/information-technology/2023/01/microsofts-new-ai-can-simulate-anyones-voice-with-3-seconds-of-audio/ | |||

| title = Microsoft’s new AI can simulate anyone’s voice with 3 seconds of audio | |||

| last = Edwards | |||

| first = Benj | |||

| date = 2023-01-10 | |||

| website = [[w:Arstechnica.com]] | |||

| publisher = Arstechnica | |||

| access-date = 2023-05-05 | |||

| quote = For the paper's conclusion, they write: "Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker. To mitigate such risks, it is possible to build a detection model to discriminate whether an audio clip was synthesized by VALL-E. We will also put Microsoft AI Principles into practice when further developing the models." | |||

}} | |||

</ref> | </ref> | ||

* | * '''2023''' | January 1st | '''<font color="green">Law</font>''' | {{#lst:Law on sexual offences in Finland 2023|what-is-it}} | ||

* ''' | * '''2022''' | <font color="orange">'''science'''</font> and <font color="green">'''demonstration'''</font> | [[w:OpenAI]][https://openai.com/ (.com)] published [[w:ChatGPT]], a conversational AI accessible with a free account at [https://chat.openai.com/ chat.openai.com]. The initial version was published on 2022-11-30. | ||

* '''2022''' | '''<font color="green">brief report of counter-measures</font>''' | {{#lst:Protecting world leaders against deep fakes using facial, gestural, and vocal mannerisms|what-is-it}} Publication date 2022-11-23. | |||

* | |||

* '''2022''' | '''<font color="green">counter-measure</font>''' | {{#lst:Detecting deep-fake audio through vocal tract reconstruction|what-is-it}} | |||

* | :{{#lst:Detecting deep-fake audio through vocal tract reconstruction|original-reporting}}. Presented to peers in August 2022 and to the general public in September 2022. | ||

* '''2022''' | <font color="orange">'''disinformation attack'''</font> | In June 2022 a digital look-and-sound-alike with the appearance and voice of [[w:Vitali Klitschko]], mayor of [[w:Kyiv]], held fake video phone calls with several European mayors. The Germans determined that the video phone call was fake by contacting the Ukrainian officials. This attempted covert disinformation attack was originally reported by [[w:Der Spiegel]].<ref>https://www.theguardian.com/world/2022/jun/25/european-leaders-deepfake-video-calls-mayor-of-kyiv-vitali-klitschko</ref><ref>https://www.dw.com/en/vitali-klitschko-fake-tricks-berlin-mayor-in-video-call/a-62257289</ref> | |||

* | |||

* '''2022''' | science | [[w:DALL-E]] 2, a successor designed to generate more realistic images at higher resolutions that "can combine concepts, attributes, and styles" was published in April 2022.<ref>{{Cite web |title=DALL·E 2 |url=https://openai.com/dall-e-2/ |access-date=2023-04-22 |website=OpenAI |language=en-US}}</ref> ([https://en.wikipedia.org/w/index.php?title=DALL-E&oldid=1151136107 Wikipedia]) | |||

* https:// | |||

* | * '''2022''' | '''<font color="green">counter-measure</font>''' | {{#lst:Protecting President Zelenskyy against deep fakes|what-is-it}} Preprint published in February 2022 and submitted to [[w:arXiv]] in June 2022 | ||

''' | * '''2022''' | '''<font color="green">science / review of counter-measures</font>''' | [https://www.mdpi.com/1999-4893/15/5/155 ''''''A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions'''''' at mdpi.com]<ref name="Audio Deepfake detection review 2022"> | ||

{{cite journal | |||

| last1 = Almutairi | |||

| first1 = Zaynab | |||

| last2 = Elgibreen | |||

| first2 = Hebah | |||

| date = 2022-05-04 | |||

| title = A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions | |||

| url = https://www.mdpi.com/1999-4893/15/5/155 | |||

| journal = [[w:Algorithms (journal)]] | |||

| volume = | |||

| issue = | |||

| pages = | |||

| doi = 10.3390/a15050155 | |||

| access-date = 2022-10-18 | |||

}} | |||

= | |||

< | </ref>, a review of audio deepfake detection methods by researchers Zaynab Almutairi and Hebah Elgibreen of the [[w:King Saud University]], Saudi Arabia, published on Wednesday 2022-05-04 in [[w:Algorithms (journal)]] by the [[w:MDPI]] (Multidisciplinary Digital Publishing Institute). This article belongs to the Special Issue [https://www.mdpi.com/journal/algorithms/special_issues/Adversarial_Federated_Machine_Learning ''Commemorative Special Issue: Adversarial and Federated Machine Learning: State of the Art and New Perspectives'' at mdpi.com] | ||

* '''2022''' | '''<font color="green">science / counter-measure</font>''' | [https://arxiv.org/abs/2203.15563 ''''''Attacker Attribution of Audio Deepfakes'''''' at arxiv.org], a pre-print presented at the [https://www.interspeech2022.org/ Interspeech 2022 conference], organized by the [[w:International Speech Communication Association]] in Korea on September 18-22, 2022. | |||

* '''2021''' | Science and demonstration | In the NeurIPS 2021 held virtually in December researchers from Nvidia and [[w:Aalto University]] present their paper [https://nvlabs.github.io/stylegan3/ '''''Alias-Free Generative Adversarial Networks (StyleGAN3)''''' at nvlabs.github.io] and associated [https://github.com/NVlabs/stylegan3 implementation] in [[w:PyTorch]] and the results are deceivingly human-like in appearance. [https://nvlabs-fi-cdn.nvidia.com/stylegan3/stylegan3-paper.pdf StyleGAN3 paper as .pdf at nvlabs-fi-cdn.nvidia.com] | * '''2021''' | Science and demonstration | In the NeurIPS 2021 held virtually in December researchers from Nvidia and [[w:Aalto University]] present their paper [https://nvlabs.github.io/stylegan3/ '''''Alias-Free Generative Adversarial Networks (StyleGAN3)''''' at nvlabs.github.io] and associated [https://github.com/NVlabs/stylegan3 implementation] in [[w:PyTorch]] and the results are deceivingly human-like in appearance. [https://nvlabs-fi-cdn.nvidia.com/stylegan3/stylegan3-paper.pdf StyleGAN3 paper as .pdf at nvlabs-fi-cdn.nvidia.com] | ||

| Line 813: | Line 536: | ||

|last=Rosner | |last=Rosner | ||

|first=Helen | |first=Helen | ||

|author-link=Helen Rosner | |author-link=[[w:Helen Rosner]] | ||

|date=2021-07-15 | |date=2021-07-15 | ||

|title=A Haunting New Documentary About Anthony Bourdain | |title=A Haunting New Documentary About Anthony Bourdain | ||

| Line 830: | Line 553: | ||

* '''2021''' | '''<font color="red">crime / fraud</font>''' | {{#lst:Synthetic human-like fakes|2021 digital sound-alike enabled fraud}} | * '''2021''' | '''<font color="red">crime / fraud</font>''' | {{#lst:Synthetic human-like fakes|2021 digital sound-alike enabled fraud}} | ||

* '''<font color="green">2020</font>''' | '''<font color="green">counter-measure</font>''' | | |||

* '''2021''' | science and demonstration | '''DALL-E''', a [[w:deep learning]] model developed by [[w:OpenAI]] to generate digital images from [[w:natural language]] descriptions called "prompts", was published in January 2021. DALL-E uses a version of [[w:GPT-3]] modified to generate images. (Adapted from [https://en.wikipedia.org/w/index.php?title=DALL-E&oldid=1151136107 Wikipedia]) | |||

* '''<font color="green">2020</font>''' | '''<font color="green">counter-measure</font>''' | The [https://incidentdatabase.ai/ ''''''AI Incident Database'''''' at incidentdatabase.ai] was introduced on 2020-11-18 by the [[w:Partnership on AI]].<ref name="PartnershipOnAI2020">https://www.partnershiponai.org/aiincidentdatabase/</ref> | |||

[[File:Appearance of Queen Elizabeth II stolen by Channel 4 in Dec 2020 (screenshot at 191s).png|thumb|right|480px|In Dec 2020 Channel 4 aired a Queen-like fake i.e. they had thieved the appearance of Queen Elizabeth II using deepfake methods.]] | |||

* '''2020''' | '''Controversy''' / '''Public service announcement''' | Channel 4 thieved the appearance of Queen Elizabeth II using deepfake methods. The product of synthetic human-like fakery originally aired on Channel 4 on 25 December at 15:25 GMT.<ref name="Queen-like deepfake 2020 BBC reporting">https://www.bbc.com/news/technology-55424730</ref> [https://www.youtube.com/watch?v=IvY-Abd2FfM&t=3s View in YouTube] | |||

* '''2020''' | reporting | [https://www.wired.co.uk/article/deepfake-porn-websites-videos-law "''Deepfake porn is now mainstream. And major sites are cashing in''" at wired.co.uk] by Matt Burgess. Published August 2020. | * '''2020''' | reporting | [https://www.wired.co.uk/article/deepfake-porn-websites-videos-law "''Deepfake porn is now mainstream. And major sites are cashing in''" at wired.co.uk] by Matt Burgess. Published August 2020. | ||

| Line 837: | Line 567: | ||

** [https://www.cnet.com/news/mit-releases-deepfake-video-of-nixon-announcing-nasa-apollo-11-disaster/ Cnet.com July 2020 reporting ''MIT releases deepfake video of 'Nixon' announcing NASA Apollo 11 disaster''] | ** [https://www.cnet.com/news/mit-releases-deepfake-video-of-nixon-announcing-nasa-apollo-11-disaster/ Cnet.com July 2020 reporting ''MIT releases deepfake video of 'Nixon' announcing NASA Apollo 11 disaster''] | ||

* '''2020''' | US state law | {{#lst: | * '''2020''' | US state law | {{#lst:Laws against synthesis and other related crimes|California2020}} | ||

* '''2020''' | Chinese legislation | {{#lst: | * '''2020''' | Chinese legislation | {{#lst:Laws against synthesis and other related crimes|China2020}} | ||

== 2010's synthetic human-like fakes == | |||

* '''2019''' | science and demonstration | At the December 2019 NeurIPS conference, a novel method for making animated fakes of anything with AI [https://aliaksandrsiarohin.github.io/first-order-model-website/ '''''First Order Motion Model for Image Animation''''' (website at aliaksandrsiarohin.github.io)], [https://proceedings.neurips.cc/paper/2019/file/31c0b36aef265d9221af80872ceb62f9-Paper.pdf (paper)] [https://github.com/AliaksandrSiarohin/first-order-model (github)] was presented.<ref group="1st seen in">https://www.technologyreview.com/2020/08/28/1007746/ai-deepfakes-memes/</ref> | * '''2019''' | science and demonstration | At the December 2019 NeurIPS conference, a novel method for making animated fakes of anything with AI [https://aliaksandrsiarohin.github.io/first-order-model-website/ '''''First Order Motion Model for Image Animation''''' (website at aliaksandrsiarohin.github.io)], [https://proceedings.neurips.cc/paper/2019/file/31c0b36aef265d9221af80872ceb62f9-Paper.pdf (paper)] [https://github.com/AliaksandrSiarohin/first-order-model (github)] was presented.<ref group="1st seen in">https://www.technologyreview.com/2020/08/28/1007746/ai-deepfakes-memes/</ref> | ||

** Reporting [https://www.technologyreview.com/2020/08/28/1007746/ai-deepfakes-memes/ '''''Memers are making deepfakes, and things are getting weird''''' at technologyreview.com], 2020-08-28 by Karen Hao. | ** Reporting [https://www.technologyreview.com/2020/08/28/1007746/ai-deepfakes-memes/ '''''Memers are making deepfakes, and things are getting weird''''' at technologyreview.com], 2020-08-28 by Karen Hao. | ||

* '''2019''' | demonstration | In September 2019 [[w:Yle]], the Finnish [[w:public broadcasting company]], aired a result of experimental [[w:journalism]], [https://yle.fi/uutiset/3-10955498 '''a deepfake of the President in office'''] [[w:Sauli Niinistö]] in its main news broadcast for the purpose of highlighting the advancing disinformation technology and problems that arise from it. | * '''2019''' | demonstration | In September 2019 [[w:Yle]], the Finnish [[w:public broadcasting company]], aired a result of experimental [[w:journalism]], [https://yle.fi/uutiset/3-10955498 '''a deepfake of the President in office'''] [[w:Sauli Niinistö]] in its main news broadcast for the purpose of highlighting the advancing disinformation technology and problems that arise from it. | ||

* '''2019''' | US state law | {{#lst: | * '''2019''' | US state law | {{#lst:Laws against synthesis and other related crimes|Texas2019}} | ||

* '''2019''' | US state law | {{#lst: | * '''2019''' | US state law | {{#lst:Laws against synthesis and other related crimes|Virginia2019}} | ||

* '''2019''' | Science | [https://arxiv.org/pdf/1809.10460.pdf '''''Sample Efficient Adaptive Text-to-Speech''''' .pdf at arxiv.org], a 2019 paper from Google researchers, published as a conference paper at [[w:International Conference on Learning Representations]] (ICLR)<ref group="1st seen in" name="ConnectedPapers suggestion on Google Transfer learning 2018"> https://www.connectedpapers.com/main/8fc09dfcff78ac9057ff0834a83d23eb38ca198a/Transfer-Learning-from-Speaker-Verification-to-Multispeaker-TextToSpeech-Synthesis/graph</ref> | * '''2019''' | Science | [https://arxiv.org/pdf/1809.10460.pdf '''''Sample Efficient Adaptive Text-to-Speech''''' .pdf at arxiv.org], a 2019 paper from Google researchers, published as a conference paper at [[w:International Conference on Learning Representations]] (ICLR)<ref group="1st seen in" name="ConnectedPapers suggestion on Google Transfer learning 2018"> https://www.connectedpapers.com/main/8fc09dfcff78ac9057ff0834a83d23eb38ca198a/Transfer-Learning-from-Speaker-Verification-to-Multispeaker-TextToSpeech-Synthesis/graph</ref> | ||

| Line 986: | Line 716: | ||

* '''2013''' | demonstration | A '''[https://ict.usc.edu/pubs/Scanning%20and%20Printing%20a%203D%20Portrait%20of%20President%20Barack%20Obama.pdf 'Scanning and Printing a 3D Portrait of President Barack Obama' at ict.usc.edu]'''. A 7D model and a 3D bust was made of President Obama with his consent. Relevancy: <font color="green">'''Relevancy: certain'''</font> | * '''2013''' | demonstration | A '''[https://ict.usc.edu/pubs/Scanning%20and%20Printing%20a%203D%20Portrait%20of%20President%20Barack%20Obama.pdf 'Scanning and Printing a 3D Portrait of President Barack Obama' at ict.usc.edu]'''. A 7D model and a 3D bust was made of President Obama with his consent. Relevancy: <font color="green">'''Relevancy: certain'''</font> | ||

=== 2000's synthetic human-like fakes | * '''2011''' | <font color="green">'''Law in Finland'''</font> | Distribution, attempted distribution and possession of '''synthetic [[w:Child sexual abuse material|CSAM]]''' were '''criminalized''' on Wednesday 2011-06-01, upon the initiative of the [[w:Vanhanen II Cabinet]]. These protections against CSAM were moved into 19 §, 20 § and 21 § of Chapter 20 when the [[Law on sexual offences in Finland 2023]] was improved and gathered into Chapter 20 upon the initiative of the [[w:Marin Cabinet]]. | ||

== 2000's synthetic human-like fakes == | |||

* '''2010''' | movie | [[w:Walt Disney Pictures]] released a sci-fi sequel entitled ''[[w:Tron: Legacy]]'' with a digitally rejuvenated digital look-alike made of the actor [[w:Jeff Bridges]] playing the [[w:antagonist]] [[w:List of Tron characters#CLU|w:CLU]]. | * '''2010''' | movie | [[w:Walt Disney Pictures]] released a sci-fi sequel entitled ''[[w:Tron: Legacy]]'' with a digitally rejuvenated digital look-alike made of the actor [[w:Jeff Bridges]] playing the [[w:antagonist]] [[w:List of Tron characters#CLU|w:CLU]]. | ||

| Line 1,021: | Line 753: | ||

* '''2002''' | music video | '''[https://www.youtube.com/watch?v=3qIXIHAmcKU 'Bullet' by Covenant on YouTube]''' by [[w:Covenant (band)]] from their album [[w:Northern Light (Covenant album)]]. Relevancy: Contains the best upper-torso digital look-alike of Eskil Simonsson (vocalist) that their organization could procure at the time. Here you can observe the '''classic "''skin looks like cardboard''"-bug''' (assuming this was not intended) that '''thwarted efforts to''' make digital look-alikes that '''pass human testing''' before the '''reflectance capture and dissection in 1999''' by [[w:Paul Debevec]] et al. at the [[w:University of Southern California]] and subsequent development of the '''"Analytical [[w:bidirectional reflectance distribution function|w:BRDF]]"''' (quote-unquote) by ESC Entertainment, a company set up for the '''sole purpose''' of '''making the cinematography''' for the 2003 films Matrix Reloaded and Matrix Revolutions '''possible''', led by George Borshukov. | * '''2002''' | music video | '''[https://www.youtube.com/watch?v=3qIXIHAmcKU 'Bullet' by Covenant on YouTube]''' by [[w:Covenant (band)]] from their album [[w:Northern Light (Covenant album)]]. Relevancy: Contains the best upper-torso digital look-alike of Eskil Simonsson (vocalist) that their organization could procure at the time. Here you can observe the '''classic "''skin looks like cardboard''"-bug''' (assuming this was not intended) that '''thwarted efforts to''' make digital look-alikes that '''pass human testing''' before the '''reflectance capture and dissection in 1999''' by [[w:Paul Debevec]] et al. at the [[w:University of Southern California]] and subsequent development of the '''"Analytical [[w:bidirectional reflectance distribution function|w:BRDF]]"''' (quote-unquote) by ESC Entertainment, a company set up for the '''sole purpose''' of '''making the cinematography''' for the 2003 films Matrix Reloaded and Matrix Revolutions '''possible''', led by George Borshukov. | ||

== 1990's synthetic human-like fakes == | |||

[[File:Institute for Creative Technologies (logo).jpg|thumb|left|156px|Logo of the '''[[w:Institute for Creative Technologies]]''' founded in 1999 in the [[w:University of Southern California]] by the [[w:United States Army]]]] | [[File:Institute for Creative Technologies (logo).jpg|thumb|left|156px|Logo of the '''[[w:Institute for Creative Technologies]]''' founded in 1999 in the [[w:University of Southern California]] by the [[w:United States Army]]]] | ||

| Line 1,028: | Line 760: | ||

* <font color="red">'''1999'''</font> | <font color="red">'''institute founded'''</font> | The '''[[w:Institute for Creative Technologies]]''' was founded by the [[w:United States Army]] in the [[w:University of Southern California]]. It collaborates with the [[w:United States Army Futures Command]], [[w:United States Army Combat Capabilities Development Command]], [[w:Combat Capabilities Development Command Soldier Center]] and [[w:United States Army Research Laboratory]].<ref name="ICT-about">https://ict.usc.edu/about/</ref>. In 2016 [[w:Hao Li]] was appointed to direct the institute. | * <font color="red">'''1999'''</font> | <font color="red">'''institute founded'''</font> | The '''[[w:Institute for Creative Technologies]]''' was founded by the [[w:United States Army]] in the [[w:University of Southern California]]. It collaborates with the [[w:United States Army Futures Command]], [[w:United States Army Combat Capabilities Development Command]], [[w:Combat Capabilities Development Command Soldier Center]] and [[w:United States Army Research Laboratory]].<ref name="ICT-about">https://ict.usc.edu/about/</ref>. In 2016 [[w:Hao Li]] was appointed to direct the institute. | ||

* '''1997''' | '''technology / science''' | [https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/human/bregler-sig97.pdf ''''Video rewrite: Driving visual speech with audio'''' at www2.eecs.berkeley.edu]<ref name="Bregler1997" /><ref group="1st seen in" name="Bohacek-Farid-2022"> | |||

PROTECTING PRESIDENT ZELENSKYY AGAINST DEEP FAKES https://arxiv.org/pdf/2206.12043.pdf | |||

</ref> Christoph Bregler, Michele Covell and Malcolm Slaney presented their work at the ACM SIGGRAPH 1997. [https://www.dropbox.com/sh/s4l00z7z4gn7bvo/AAAP5oekFqoelnfZYjS8NQyca?dl=0 Download video evidence of ''Video rewrite: Driving visual speech with audio'' Bregler et al 1997 from dropbox.com], [http://chris.bregler.com/videorewrite/ view author's site at chris.bregler.com], [https://dl.acm.org/doi/10.1145/258734.258880 paper at dl.acm.org] [https://www.researchgate.net/publication/220720338_Video_Rewrite_Driving_Visual_Speech_with_Audio paper at researchgate.net] | |||

* '''1994''' | movie | [[w:The Crow (1994 film)]] was the first film production to make use of [[w:digital compositing]] of a computer simulated representation of a face onto scenes filmed using a [[w:body double]]. Necessity was the muse, as the actor [[w:Brandon Lee]], who portrayed the protagonist, was accidentally killed on set. | * '''1994''' | movie | [[w:The Crow (1994 film)]] was the first film production to make use of [[w:digital compositing]] of a computer simulated representation of a face onto scenes filmed using a [[w:body double]]. Necessity was the muse, as the actor [[w:Brandon Lee]], who portrayed the protagonist, was accidentally killed on set. | ||

== 1970's synthetic human-like fakes == | |||

{{#ev:vimeo|16292363|480px|right|''[[w:A Computer Animated Hand|w:A Computer Animated Hand]]'' is a 1972 short film by [[w:Edwin Catmull]] and [[w:Fred Parke]]. This was the first time that [[w:computer-generated imagery]] was used in film to animate likenesses of moving human appearance.}} | {{#ev:vimeo|16292363|480px|right|''[[w:A Computer Animated Hand|w:A Computer Animated Hand]]'' is a 1972 short film by [[w:Edwin Catmull]] and [[w:Fred Parke]]. This was the first time that [[w:computer-generated imagery]] was used in film to animate likenesses of moving human appearance.}} | ||

| Line 1,041: | Line 779: | ||

* '''1971''' | science | '''[https://interstices.info/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud/ 'Images de synthèse : palme de la longévité pour l’ombrage de Gouraud' (still photos)]'''. [[w:Henri Gouraud (computer scientist)]] made the first [[w:Computer graphics]] [[w:geometry]] [[w:digitization]] and representation of a human face. The model was his wife Sylvie Gouraud. The 3D model was a simple [[w:wire-frame model]] and he applied [[w:Gouraud shading]] to produce the '''first known representation''' of '''human-likeness''' on computer. <ref>{{cite web|title=Images de synthèse : palme de la longévité pour l'ombrage de Gouraud|url=http://interstices.info/jcms/c_25256/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud}}</ref> | * '''1971''' | science | '''[https://interstices.info/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud/ 'Images de synthèse : palme de la longévité pour l’ombrage de Gouraud' (still photos)]'''. [[w:Henri Gouraud (computer scientist)]] made the first [[w:Computer graphics]] [[w:geometry]] [[w:digitization]] and representation of a human face. The model was his wife Sylvie Gouraud. The 3D model was a simple [[w:wire-frame model]] and he applied [[w:Gouraud shading]] to produce the '''first known representation''' of '''human-likeness''' on computer. <ref>{{cite web|title=Images de synthèse : palme de la longévité pour l'ombrage de Gouraud|url=http://interstices.info/jcms/c_25256/images-de-synthese-palme-de-la-longevite-pour-lombrage-de-gouraud}}</ref> | ||

== 1960's synthetic human-like fakes == | |||

* '''1961''' | demonstration | The first singing by a computer was performed by an [[w:IBM 704]] and the song was [[w:Daisy Bell]], written in 1892 by British songwriter [[w:Harry Dacre]]. Go to [[Mediatheque#1961]] to view. | * '''1961''' | demonstration | The first singing by a computer was performed by an [[w:IBM 704]] and the song was [[w:Daisy Bell]], written in 1892 by British songwriter [[w:Harry Dacre]]. Go to [[Mediatheque#1961]] to view. | ||

== 1930's synthetic human-like fakes == | |||

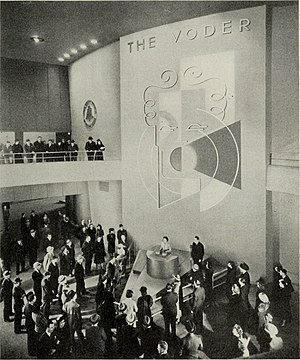

[[File:Homer Dudley (October 1940). "The Carrier Nature of Speech". Bell System Technical Journal, XIX(4);495-515. -- Fig.5 The voder being demonstrated at the New York World's Fair.jpg|thumb|left|300px|'''[[w:Voder]]''' demonstration pavilion at the [[w:1939 New York World's Fair]]]] | [[File:Homer Dudley (October 1940). "The Carrier Nature of Speech". Bell System Technical Journal, XIX(4);495-515. -- Fig.5 The voder being demonstrated at the New York World's Fair.jpg|thumb|left|300px|'''[[w:Voder]]''' demonstration pavilion at the [[w:1939 New York World's Fair]]]] | ||

* '''1939''' | demonstration | '''[[w:Voder]]''' (''Voice Operating Demonstrator'') from the [[w:Bell Labs|w:Bell Telephone Laboratory]] was the first time that [[w:speech synthesis]] was done electronically by breaking it down into its acoustic components. It was invented by [[w:Homer Dudley]] in 1937–1938 and developed on his earlier work on the [[w:vocoder]]. (Wikipedia) | * '''1939''' | demonstration | '''[[w:Voder]]''' (''Voice Operating Demonstrator'') from the [[w:Bell Labs|w:Bell Telephone Laboratory]] was the first time that [[w:speech synthesis]] was done electronically by breaking it down into its acoustic components. It was invented by [[w:Homer Dudley]] in 1937–1938 and developed on his earlier work on the [[w:vocoder]]. (Wikipedia) | ||

== 1770's synthetic human-like fakes == | |||

[[File:Kempelen Speakingmachine.JPG|right|thumb|300px|A replica of [[w:Wolfgang von Kempelen]]'s [[w:Wolfgang von Kempelen's Speaking Machine]], built 2007–09 at the Department of [[w:Phonetics]], [[w:Saarland University]], [[w:Saarbrücken]], Germany. This machine added models of the tongue and lips, enabling it to produce [[w:consonant]]s as well as [[w:vowel]]s]] | [[File:Kempelen Speakingmachine.JPG|right|thumb|300px|A replica of [[w:Wolfgang von Kempelen]]'s [[w:Wolfgang von Kempelen's Speaking Machine]], built 2007–09 at the Department of [[w:Phonetics]], [[w:Saarland University]], [[w:Saarbrücken]], Germany. This machine added models of the tongue and lips, enabling it to produce [[w:consonant]]s as well as [[w:vowel]]s]] | ||


----

= Footnotes =

<references group="footnote" />

== Contact information of organizations ==

Please contact [[Organizations, studies and events against synthetic human-like fakes|these organizations]] and tell them to work harder against the disinformation weapons.

= 1st seen in =

<references group="1st seen in" />

= References =

<references />

Latest revision as of 21:00, 19 October 2024

Definitions

When the camera does not exist, but the subject being imaged with a simulation of a (movie) camera deceives the watcher into believing it is some living or dead person, it is a digital look-alike.

In 2017-2018 this started to be referred to as w:deepfake, even though altering video footage of humans with a computer to deceiving effect is actually 20 years older than the name "deep fakes" or "deepfakes".[1][2]

When it cannot be determined by human testing or media forensics whether some voice is a synthetic fake of a person's voice or an actual recording of that person's real voice, it is a pre-recorded digital sound-alike. This is now commonly referred to as w:audio deepfake.

A real-time digital look-and-sound-alike in a video call was used to defraud a substantial amount of money in 2023.[3]

- Read more about synthetic human-like fakes, see and support organizations and events against synthetic human-like fakes and what they are doing, what kinds of Laws against synthesis and other related crimes have been formulated, examine the SSFWIKI timeline of synthetic human-like fakes or view the Mediatheque.

Click on the picture or Obama's appearance thieved - a public service announcement digital look-alike by Monkeypaw Productions and Buzzfeed to view an April 2018 public service announcement moving digital look-alike made to appear Obama-like. The video is accompanied by an imitator sound-alike, and was made by w:Monkeypaw Productions (.com) in conjunction with w:BuzzFeed (.com). You can also view the same video at YouTube.com.[4]

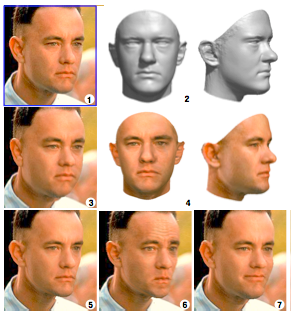

(1) Sculpting a morphable model to one single picture

(2) Produces 3D approximation

(4) Texture capture

(3) The 3D model is rendered back to the image with weight gain

(5) With weight loss

(6) Looking annoyed

(7) Forced to smile

Image 2 by Blanz and Vetter – Copyright ACM 1999 – http://dl.acm.org/citation.cfm?doid=311535.311556 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

Digital look-alikes

Introduction to digital look-alikes

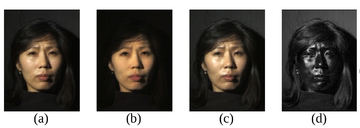

(a) Normal image in dot lighting

(b) Image of the diffuse reflection, which is caught by placing a vertical polarizer in front of the light source and a horizontal one in front of the camera

(c) Image of the highlight specular reflection which is caught by placing both polarizers vertically

(d) Difference of c and b, which yields the specular component

Images are scaled to seem to be the same luminosity.

Original image by w:Paul Debevec et al. – Copyright ACM 2000 – https://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

In the cinemas we have seen digital look-alikes for over 20 years. These digital look-alikes have "clothing" (a simulation of clothing is not clothing) or "superhero costumes" and "superbaddie costumes", and they don't need to care about the laws of physics, let alone the laws of physiology. It is generally accepted that digital look-alikes made their public debut in the sequels of The Matrix, i.e. w:The Matrix Reloaded and w:The Matrix Revolutions, released in 2003. It can be considered almost certain that it was not possible to make these before the year 1999, as the final piece of the puzzle needed to make a (still) digital look-alike that passes human testing, the reflectance capture over the human face, was made for the first time in 1999 at the w:University of Southern California and was presented to the crème de la crème of the computer graphics field at their annual gathering, SIGGRAPH 2000.[5]

“Do you think that was w:Hugo Weaving's left cheekbone that w:Keanu Reeves punched in with his right fist?”

The problems with digital look-alikes

Extremely unfortunately for humankind, organized criminal leagues that possess the weapons capability of making believable-looking synthetic pornography are producing terroristic synthetic pornography[footnote 1] on industrial production pipelines by animating digital look-alikes, and are distributing it on the murky Internet in exchange for money stacks that are getting thinner and thinner as time goes by.

These industrially produced pornographic delusions are causing great human suffering, especially in their direct victims, but they are also tearing our communities and societies apart, sowing blind rage, perceptions of deepening chaos and feelings of powerlessness, and provoking violence.

These kinds of hate illustration increase and strengthen hate feeling, hate thinking, hate speech and hate crimes, tear our fragile social constructions apart and, with time, pervert humankind's view of humankind into an almost unrecognizable shape, unless we interfere with resolve.

Children-like sexual abuse images

Sadly, by 2023 there is a market for synthetic human-like sexual abuse material that looks like children. 'Illegal trade in AI child sex abuse images exposed' at bbc.com (2023-06-28) reports w:Stable Diffusion being abused to produce this kind of image. The w:Internet Watch Foundation also reports on the alarming production of synthetic human-like sex abuse material portraying minors. See 'Prime Minister must act on threat of AI as IWF ‘sounds alarm’ on first confirmed AI-generated images of child sexual abuse' at iwf.org.uk (2023-08-18).

Fixing the problems from digital look-alikes

We need to move on 3 fields: legal, technological and cultural.

Technological: A computer vision system like FacePinPoint.com, for seeking unauthorized pornography / nudes, existed 2017-2021 and could be revived if funding is found. It was a service practically identical to the SSFWIKI original concept Adequate Porn Watcher AI (concept).

Legal: Legislators around the planet have been waking up to the reality that not everything that seems to be a video of people is a video of people, and various laws have been passed to protect humans and humanity from the menaces of synthetic human-like fakes. So far these laws mostly concern digital look-alikes, but hopefully humans will also be protected by law from other aspects of synthetic human-like fakes. See Laws against synthesis and other related crimes.

Age analysis and rejuvenating and aging syntheses

- 'An Overview of Two Age Synthesis and Estimation Techniques' at arxiv.org (.pdf), submitted for review on 2020-01-26

- 'Dual Reference Age Synthesis' at sciencedirect.com (preprint at arxiv.org) published on 2020-10-21 in w:Neurocomputing (journal)

- 'A simple automatic facial aging/rejuvenating synthesis method' at ieeexplore.ieee.org (read free at researchgate.net), published in the proceedings of the 2011 IEEE International Conference on Systems, Man and Cybernetics

- 'Age Synthesis and Estimation via Faces: A Survey' at ieeexplore.ieee.org (paywall) at researchgate.net published November 2010

Temporal limit of digital look-alikes

w:History of film technology has information about where the border is.

Digital look-alikes cannot be used to attack people who existed before the technological invention of film. For moving pictures the breakthrough is attributed to w:Auguste and Louis Lumière's w:Cinematograph premiered in Paris on 28 December 1895, though this was only the commercial and popular breakthrough, as even earlier moving pictures exist. (adapted from w:History of film)

The w:Kinetoscope is an even earlier motion picture exhibition device. A prototype for the Kinetoscope was shown to a convention of the National Federation of Women's Clubs on May 20, 1891.[6] The first public demonstration of the Kinetoscope was held at the Brooklyn Institute of Arts and Sciences on May 9, 1893. (Wikipedia)[6]

Digital sound-alikes

University of Florida published an antidote to synthetic human-like fake voices in 2022

2022 saw a brilliant counter-measure presented to peers at the 31st w:USENIX Security Symposium, 10-12 August 2022, by the w:University of Florida: Detecting deep-fake audio through vocal tract reconstruction.

The university's foundation has applied for a patent, and let us hope that they will w:copyleft the patent, as this protective method needs to be rolled out to protect humanity.

Below transcluded from the article

Detecting deep-fake audio through vocal tract reconstruction is an epic scientific work against fake human-like voices from the w:University of Florida, published to peers in August 2022.

The work Who Are You (I Really Wanna Know)? Detecting Audio DeepFakes Through Vocal Tract Reconstruction at usenix.org, presentation page, version included in the proceedings[7] and slides from researchers of the Florida Institute for Cybersecurity Research (FICS) at fics.institute.ufl.edu in the w:University of Florida received funding from the w:Office of Naval Research and was presented on 2022-08-11 at the 31st w:USENIX Security Symposium.

This work was done by PhD student Logan Blue, Kevin Warren, Hadi Abdullah, Cassidy Gibson, Luis Vargas, Jessica O’Dell, Kevin Butler and Professor Patrick Traynor.

The University of Florida Research Foundation Inc has filed for and received a US patent titled 'Detecting deep-fake audio through vocal tract reconstruction', registration number US20220036904A1 (link to patents.google.com), with 20 claims. The patent application was published on Thursday 2022-02-03. The patent application was approved on 2023-07-04 and has an adjusted expiration date of Sunday 2041-12-29. PhD student Logan Blue and professor Patrick Traynor wrote an article for the general public on the work, titled Deepfake audio has a tell – researchers use fluid dynamics to spot artificial imposter voices at theconversation.com[8], that was published Tuesday 2022-09-20 and permanently w:copylefted under Creative Commons Attribution-NoDerivatives (CC-BY-ND).

This new counter-measure needs to be rolled out to humans to protect humans against the fake human-like voices.

Below is an exact copy of the original article from the SSF! wordpress titled "Amazing method and results from University of Florida scientists in 2022 against the menaces of digital sound-alikes / audio deepfakes". Thank you to the original writers for having the wisdom of licensing the article under CC-BY-ND.

On known history of digital sound-alikes

The first English-speaking digital sound-alikes were introduced in 2016 by Adobe and DeepMind, but neither was made publicly available.

Then in 2018 at the w:Conference on Neural Information Processing Systems (NeurIPS) the work 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis' (at arXiv.org) was presented. The pre-trained model is able to steal voices from a sample of only 5 seconds with almost convincing results.

The Iframe below is transcluded from 'Audio samples from "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis"' at google.github.io, the audio samples of a sound-like-anyone machine presented at the 2018 w:NeurIPS conference by Google researchers.

Have a listen.

Observe how good the "VCTK p240" system is at deceiving the listener into thinking that it is a person that is doing the talking.

Reporting on the sound-like-anyone-machines

- "Artificial Intelligence Can Now Copy Your Voice: What Does That Mean For Humans?" May 2019 reporting at forbes.com on w:Baidu Research's attempt at the sound-like-anyone-machine demonstrated at the 2018 w:NeurIPS conference.

The video to the right, 'This AI Clones Your Voice After Listening for 5 Seconds' by '2 minute papers' at YouTube, describes the voice thieving machine presented by Google Research at w:NeurIPS 2018.

Documented crimes with digital sound-alikes

In 2019 reports of crimes being committed with digital sound-alikes started surfacing. As of Jan 2022 no reports of other types of attack than fraud have been found.

2019 digital sound-alike enabled fraud