Main Page

When there is no camera, but the subject imaged with a simulation of a (movie) camera deceives the viewer into believing it is some living or dead person, it is a digital look-alike.

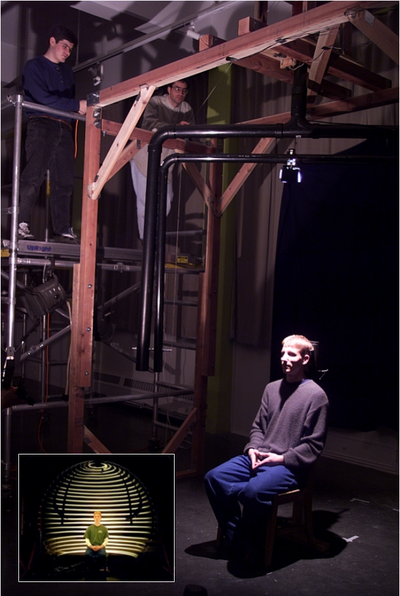

The rig consists of two rotary axes with height and radius control. A light source with a polarizer in front of it was placed on one arm, and a camera with the other polarizer on the other arm. The picture below shows what Debevec et al. did with the captured reflection.

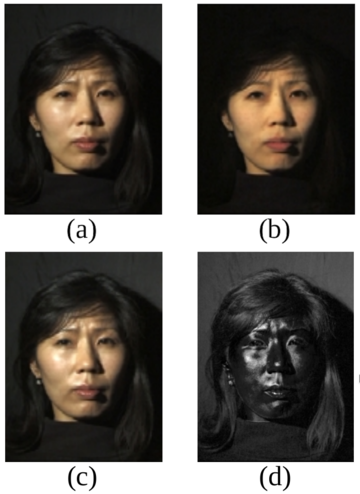

Original image by Debevec et al. – Copyright ACM 2000 – https://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

(a) Normal image under point-light illumination

(b) Image of the diffuse reflection, captured by placing a vertical polarizer in front of the light source and a horizontal one in front of the camera

(c) Image including the specular highlights, captured by placing both polarizers vertically

(d) Subtraction of b from c, which yields the specular component

The images are scaled to appear equally bright.
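The subtraction in the caption can be sketched in a few lines. With idealized polarizers, the depolarized diffuse light passes through the camera polarizer equally (about half) in either orientation, while the polarization-preserving specular reflection passes only when the two polarizers are parallel. Subtracting the cross-polarized image from the parallel-polarized one therefore leaves the specular component. This is a minimal illustration, not Debevec et al.'s code: it assumes aligned, linear (not gamma-encoded) grayscale images stored as nested Python lists, and the function names `separate_specular` and `scale_to_mean` are hypothetical. Scaling to a common mean is only one assumed way of making the images "appear equally bright".

```python
# A minimal sketch, assuming aligned, linear grayscale images stored as
# nested lists of floats. Function names are hypothetical, for illustration.

def separate_specular(parallel, cross):
    """Return the per-pixel specular estimate parallel - cross, clamped at 0.

    parallel: image taken with both polarizers vertical (diffuse + specular).
    cross:    image taken with crossed polarizers (diffuse only).
    Negative differences (e.g. from sensor noise) are clamped to zero.
    """
    if len(parallel) != len(cross) or any(
        len(p_row) != len(c_row) for p_row, c_row in zip(parallel, cross)
    ):
        raise ValueError("images must have the same dimensions")
    return [
        [max(p - c, 0.0) for p, c in zip(p_row, c_row)]
        for p_row, c_row in zip(parallel, cross)
    ]


def scale_to_mean(image, target_mean):
    """Multiply every pixel so that the image's mean brightness equals target_mean."""
    pixels = [v for row in image for v in row]
    if not pixels or sum(pixels) == 0:
        # An empty or all-black image cannot be rescaled; return a copy.
        return [row[:] for row in image]
    factor = target_mean / (sum(pixels) / len(pixels))
    return [[v * factor for v in row] for row in image]


if __name__ == "__main__":
    parallel = [[0.5, 0.75], [0.25, 0.5]]
    cross = [[0.5, 0.25], [0.25, 0.75]]
    specular = separate_specular(parallel, cross)
    print(specular)  # [[0.0, 0.5], [0.0, 0.0]]
    print(scale_to_mean(specular, 0.25))  # [[0.0, 1.0], [0.0, 0.0]]
```

Clamping at zero is a design choice for the sketch: real captures are noisy and slightly misaligned, so the raw difference can dip below zero where there is no highlight.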


In 2017-2018 this started to be referred to as a deepfake, even though deceptively altering video footage of humans with a computer is about 20 years older than the name "deep fakes" or "deepfakes".[1][2]

When neither human testing nor media forensics can determine whether a voice is a synthetic fake of some person's voice or an actual recording of that person's real voice, it is a pre-recorded digital sound-alike. This is now commonly referred to as an audio deepfake.

In 2023 a real-time digital look-and-sound-alike in a video call was used to defraud a victim of a substantial amount of money.[3]

Welcome to the Stop Synthetic Filth! wiki, an open, non-profit, copylefted public service announcement wiki that anybody can edit and that contains no pornography. It is about discovering ways to stop and minimize the damage from naked synthetic human-like fakes, i.e. digital look-alikes and digital sound-alikes, that result from covert modeling, i.e. theft of the human appearance and of the naked human voice.

Adequate Porn Watcher AI (concept), the #SSF! wiki's proposed countermeasure to synthetic porn, is a 2019 concept for an AI that would protect humans against synthetic filth attacks by looking for porn that should not exist.

How to protect yourself and others from covert modeling

Biblical explanation: for those who believe in Jesus, an explanation based on the books of Daniel and Revelation, where in Daniel 7 and Revelation 13 we are warned of this age of industrial filth.

Mediatheque | Glossary | Resources | Marketing against synthetic filth | Law proposals | Current laws and their application | Atheist explanation | About this wiki | Scratchpad for quick drafting

As of 28 January 2026 the wiki has 23 articles with 4,681 edits.

Synopses of the SSF! wiki in other languages on WordPress

- Stop Synthetic Filth! WordPress in English #SSF!

- Arrêtons les saletés synthétiques! home page in French #ASS!

- Stoppi synteettiselle saastalle! home page in Finnish #SSS!

- Stoppa syntetisk orenhet! home page in Swedish #SSO!

- Stopp sünteetisele saastale! home page in Estonian #SSS!

#SSF!'s predecessor domain is Ban-Covert-Modeling.org #BCM!. It will expire on Wednesday 2029-03-14, unless renewed.

Get in touch

- Stop Synthetic Filth is stop_synthetic_filth_org@d.consumium.org on diaspora*

- Stop Synthetic Filth is @stopsyntheticf on Twitter

Introduction

Since the early 2000s it has become (nearly) impossible to determine, in still or moving pictures, what is an image of a human imaged with a (movie) camera and what, on the other hand, is a simulation of an image of a human imaged with a simulation of a camera. When there is no camera and the target imaged with a simulation looks deceptively like some real human, dead or living, it is a digital look-alike.

Now, in the late 2010s, the same thing is happening to our voices: they can be stolen to some extent with 2016 prototypes such as Adobe Inc.'s Adobe Voco and Google's DeepMind WaveNet, and made to say anything. When it is not possible to determine, by human testing or by technological means, what is a recording of some living or dead person's real voice and what is a simulation, it is a digital sound-alike. 2018 saw the publication of Google Research's sound-like-anyone machine at the NeurIPS conference, and by the end of 2019 Symantec research had learned of 3 cases where digital sound-alike technology had been used for crimes.[4]

Therefore it is high time to act and to build the Adequate Porn Watcher AI (concept) to protect humanity from visual synthetic filth and to criminalize covert modeling of the naked human voice and synthesis from a covert voice model!

Covert modeling poses growing threats to

- The right to be the only one that looks like me (compromised by digital look-alikes)

- The right to be the only one able to make recordings that sound like me (compromised by digital sound-alikes)

These developments also have severe effects on the right to privacy, on provability by audio and video evidence, and on deniability.

Thank yous for tech

- .. but it has always helped Linux to be.

- MediaWiki is the medium of choice.

- Served by Apache HTTP Server (httpd.apache.org).

- Choice of RDBMS is MariaDB, a fork by the original people behind MySQL (mariadb.org).

- Run on copyleft Debian GNU/Linux Stable-branch servers (debian.org).

References

- Boháček, Matyáš; Farid, Hany (2022-11-23). "Protecting world leaders against deep fakes using facial, gestural, and vocal mannerisms". Proceedings of the National Academy of Sciences of the United States of America. 119 (48). doi:10.1073/pnas.221603511. Retrieved 2023-01-05.

- Bregler, Christoph; Covell, Michele; Slaney, Malcolm (1997-08-03). "Video Rewrite: Driving Visual Speech with Audio" (PDF). SIGGRAPH '97: Proceedings of the 24th annual conference on Computer graphics and interactive techniques: 353–360. doi:10.1145/258734.258880. Retrieved 2022-09-09.

- "'Deepfake' scam in China fans worries over AI-driven fraud". Reuters.com. Reuters. 2023-05-22. Retrieved 2023-06-05.

- Harwell, Drew (2020-04-16). "An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft". washingtonpost.com. Washington Post. Retrieved 2021-01-23. "Thieves used voice-mimicking software to imitate a company executive’s speech and dupe his subordinate into sending hundreds of thousands of dollars to a secret account, the company’s insurer said."